Liquid Cooling for Data Centers: The Key to Unleashing AI and High-Density Computing

Team Expert Thermal, 2024-12-13

As AI-driven data centers and high-density computing systems power today’s technological advancements, the demand for efficient data center cooling solutions is rising rapidly. Traditional air-cooling technologies fall short in handling the extreme heat produced by AI accelerators, GPUs, and CPUs, creating an urgent need for advanced liquid cooling systems.

Enter liquid cooling, a technology that can remove heat up to 1,000 times more effectively than traditional air-cooling systems, because a given volume of liquid coolant absorbs far more heat than the same volume of air. This blog takes you on a deep dive into the world of liquid cooling, explaining its components, types, and how it’s revolutionizing modern data centers.

From Direct-to-Chip (D2C) cooling to Rear-Door Heat Exchangers (RDHx) and Immersion Cooling, each system offers a unique approach to taming high thermal loads. As server power density exceeds 100 kW per rack, adopting liquid cooling solutions is essential for optimal performance in high-density data centers.

Discover how industry leaders like HPE, Dell, Lenovo, and Supermicro are leveraging liquid cooling to improve performance, reduce energy consumption, and achieve up to 50% operational cost savings. Learn about essential components like Coolant Distribution Units (CDUs), Cold Plates, and Manifolds, as well as 3D printing’s role in designing custom cooling solutions.

This blog explores the key challenges associated with adopting liquid cooling, including cost and complexity. It also highlights advanced innovations such as hybrid cooling and emerging two-phase cooling technologies that are shaping the future of thermal management in AI-driven data centers.

Ready to future-proof your data center? Discover how liquid cooling enhances hardware reliability, minimizes carbon footprints, and enables denser, quieter, and more sustainable operations. This comprehensive guide explains why transitioning to liquid cooling is essential for modern AI-powered infrastructure.

Introduction

As data centers evolve to support the growing demands of high-density computing, AI, and the rapid expansion of data, the need for robust cooling systems becomes increasingly urgent. The power consumption and heat generation of modern data center components have reached unprecedented levels, pushing air-cooling to its limits. To meet these rising challenges, liquid cooling has emerged as a game-changing technology, offering a more efficient and scalable solution. This blog post explores the world of liquid cooling, highlighting its components, benefits, and challenges, with a focus on AI accelerators, 1U servers, and the latest advancements in the field.

The Limits of Air Cooling

For decades, traditional air-cooling has been the standard method for cooling data center equipment. Fans draw cooler air from the cold aisle across hot CPUs and GPUs and their heat sinks. Two factors dominate thermal performance: the inlet air temperature and the airflow rate through the rack and heat sinks (measured in cubic feet per minute, or CFM). Together they determine whether processors stay cool enough to sustain their clock speeds.

Despite its widespread use, air-cooling is limited by air’s poor heat transfer properties and low heat capacity. It demands substantial energy for computer room air conditioning and continuous fan operation. Chassis height also constrains fan size: servers are measured in rack units (1U = 1.75 inches), and a 1U server has room only for small, high-speed fans. Because a rack holds a fixed number of U, 1U servers often outnumber 2U servers, which packs more heat into the rack while leaving less room for airflow.

To meet the increasing airflow requirements driven by higher heat dissipation densities, data centers require more fans strategically placed within servers. As thermal design power (TDP) of GPUs and CPUs rises, larger and more efficient fans are needed to overcome the increased flow impedance caused by denser heat sinks.
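The fan affinity laws make this scaling problem concrete. For a given fan in a fixed system, airflow scales roughly with fan speed, static pressure with speed squared, and fan power with speed cubed. The sketch below applies these idealized relations; the 20 CFM, 15 W baseline fan is a hypothetical value chosen for illustration, and real fans deviate from the ideal laws, especially when denser heat sinks change the system’s flow impedance.

```python
# Idealized fan affinity laws (same fan, same air density, fixed system curve):
#   flow ~ speed, pressure ~ speed**2, power ~ speed**3
def scale_fan(base_flow_cfm, base_power_w, flow_ratio):
    """Return (new_flow_cfm, pressure_ratio, new_power_w) when fan speed is
    raised so that airflow grows by flow_ratio."""
    speed_ratio = flow_ratio  # flow is proportional to speed
    new_flow = base_flow_cfm * speed_ratio
    pressure_ratio = speed_ratio ** 2
    new_power = base_power_w * speed_ratio ** 3
    return new_flow, pressure_ratio, new_power

# Hypothetical baseline: one fan moving 20 CFM at 15 W.
for ratio in (1.0, 1.5, 2.0):
    flow, p_ratio, power = scale_fan(20.0, 15.0, ratio)
    print(f"airflow x{ratio:.1f}: {flow:.0f} CFM, pressure x{p_ratio:.2f}, fan power {power:.1f} W")
```

Under these assumptions, doubling the airflow costs roughly eight times the fan power, which is one reason air-cooling becomes expensive as TDP climbs.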

For example, a rack housing 72 Nvidia B200 GPUs can consume around 120 kW, roughly ten times the power of a typical rack. This surge in power consumption underscores the urgent need for more efficient cooling solutions to prevent overheating and ensure optimal performance.
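A rough sensible-heat estimate shows what 120 kW means for air-cooling. The airflow needed to carry a heat load P with an air temperature rise ΔT is V = P / (ρ · c_p · ΔT). The sketch assumes standard air properties (about 1.2 kg/m³ and 1,005 J/(kg·K)) and a hypothetical 15 K rise from server inlet to outlet, and compares a roughly 12 kW rack (one tenth of the example) with the 120 kW rack.

```python
# Sensible-heat airflow estimate: P = rho * cp * V_dot * delta_T
AIR_DENSITY = 1.2        # kg/m^3, roughly sea-level air at ~20 C (assumption)
AIR_CP = 1005.0          # J/(kg*K), specific heat of dry air
M3S_TO_CFM = 2118.88     # 1 m^3/s expressed in cubic feet per minute

def required_airflow_cfm(heat_w, delta_t_k):
    """Airflow (CFM) needed to carry heat_w watts with an inlet-to-outlet
    air temperature rise of delta_t_k kelvin."""
    volume_flow_m3s = heat_w / (AIR_DENSITY * AIR_CP * delta_t_k)
    return volume_flow_m3s * M3S_TO_CFM

# Compare a ~12 kW rack with the ~120 kW rack, assuming a 15 K air temperature rise.
for rack_kw in (12, 120):
    cfm = required_airflow_cfm(rack_kw * 1000, 15.0)
    print(f"{rack_kw:>4} kW rack: ~{cfm:,.0f} CFM")
```

Under these assumptions the 120 kW rack needs on the order of 14,000 CFM, ten times the airflow of the smaller rack, because required airflow scales linearly with heat load for a fixed temperature rise.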

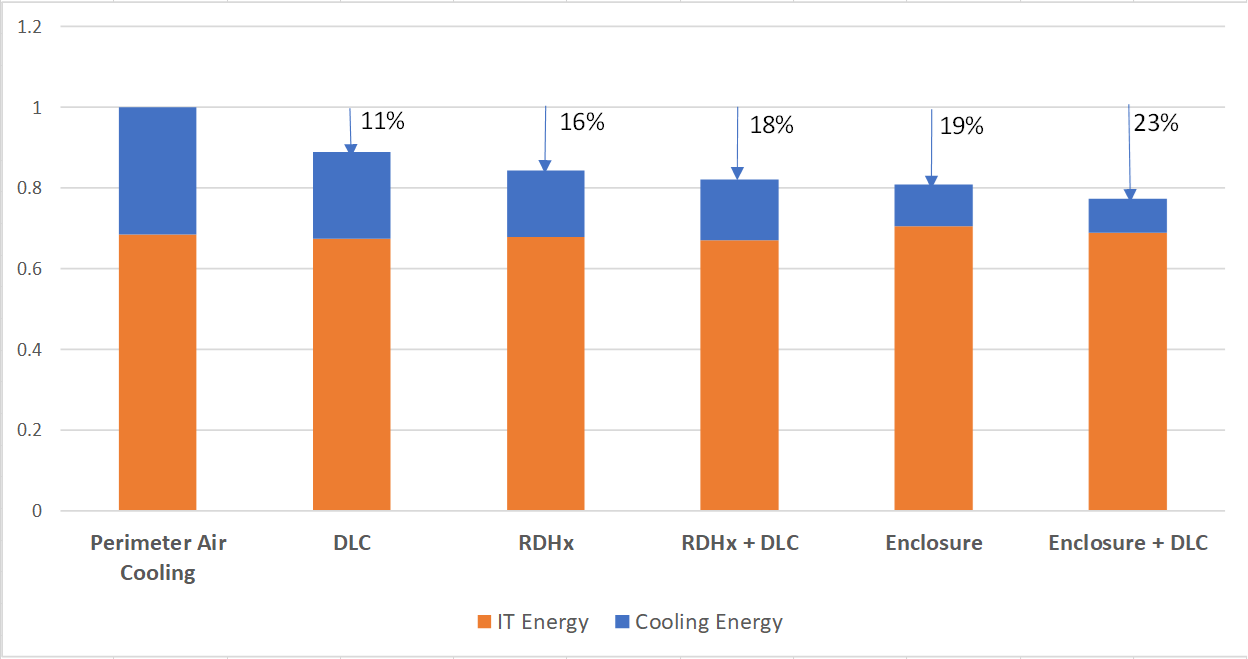

Limits of Air Cooling and the Advantages of Liquid Cooling

Higher Heat Loads: Air-cooling struggles to cope with the increasing heat loads generated by modern IT equipment, especially high-density racks and powerful AI accelerators. Liquid cooling can be up to 1,000 times more effective than air-cooling at carrying that heat away.

Energy Efficiency: By reducing or eliminating the reliance on fans, liquid cooling can substantially lower energy consumption compared to traditional air-cooling. These systems maintain optimal operating temperatures, can cut operational expenses (OPEX) by over 40%, improve power usage effectiveness (PUE), and shrink the data center’s carbon footprint.

Higher Density: Liquid cooling enables denser deployments of IT equipment, making better use of available data center space. This increased density allows data centers to scale more efficiently without compromising system performance.

Quiet Operation: Compared to air-cooled systems, liquid cooling operates much more quietly. This reduction in noise creates a more pleasant and less disruptive working environment for both employees and sensitive equipment.

Reliability and Longevity: Efficient heat management through liquid cooling extends the lifespan of critical IT components, minimizing the risks of overheating and system failures. This results in more reliable, durable systems and significantly reduced maintenance costs.

Faster ROI: Liquid cooling investments can pay off quickly, in some deployments in less than a year. Significant energy savings and performance improvements make it a highly cost-effective solution for modern data centers.
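One way to ground the “up to 1,000 times” comparison under Higher Heat Loads is volumetric heat capacity: the heat a given volume of fluid absorbs per degree of temperature rise. The sketch below uses approximate room-temperature properties for air and water (assumptions, not measured data). System-level gains are smaller once pumps, heat exchangers, and approach temperatures are included, so the multiplier quoted for liquid cooling depends on what is being compared.

```python
# Compare how much heat 1 litre of each fluid absorbs per kelvin of temperature rise.
# Property values are approximate, near room temperature (assumptions for illustration).
FLUIDS = {
    # name: (density kg/m^3, specific heat J/(kg*K))
    "air": (1.2, 1005.0),
    "water": (997.0, 4186.0),
}

def volumetric_heat_capacity(density, cp):
    """Heat stored per cubic metre per kelvin, in J/(m^3*K)."""
    return density * cp

air_c = volumetric_heat_capacity(*FLUIDS["air"])
water_c = volumetric_heat_capacity(*FLUIDS["water"])
print(f"air:   {air_c / 1000:.2f} J/(L*K)")
print(f"water: {water_c / 1000:.0f} J/(L*K)")
print(f"ratio: ~{water_c / air_c:,.0f}x")
```

On this metric water comes out roughly 3,500 times ahead of air.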

Liquid Cooling

The world has witnessed rapid growth in processing power and packaging density for both central processing units (CPUs) and graphics processing units (GPUs). Processor power has roughly tripled, reaching up to 500 W for CPUs and over 1,000 W for GPUs. Consequently, total rack power has surged from around 15 kW to beyond 100 kW, while the temperatures these devices must be held to have dropped from the high 80s °C to the mid 50s °C. Liquid cooling has become essential to cool these platforms reliably and efficiently as they follow silicon vendor roadmaps.

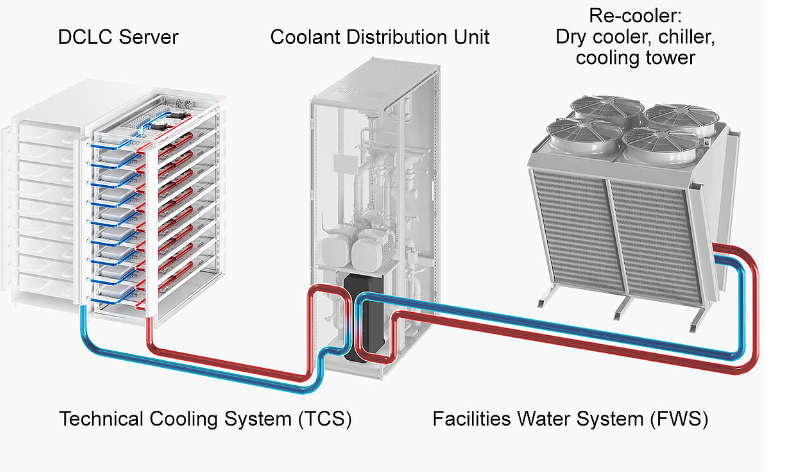

Liquid cooling is an advanced thermal management approach that uses liquid coolants to remove heat from high-power components, typically through cold plates. The heat is then rejected to the ambient environment through liquid-to-air or liquid-to-liquid heat exchangers. Unlike air-cooling, which moves air through heat sinks to carry heat into the surrounding air, liquid cooling circulates coolant through a network of tubes and heat exchangers that transfer the heat to the outside environment. This process is significantly more efficient and can manage much higher heat loads, making it well suited to data centers.

A typical liquid flow rate is about 1.5 liters per minute per kilowatt (L/min/kW), though the value varies with the heat transfer effectiveness of the cold plates and the heat produced by the liquid-cooled IT components.

Three prominent cooling solutions offer different approaches depending on infrastructure, heat loads, and operational goals: Direct-to-Chip (D2C) cooling, Rear Door Heat Exchangers (RDHx), and Immersion Cooling. Here’s a breakdown of each method to help data centers choose the best fit for their needs.

- Direct-to-Chip (D2C) Cooling

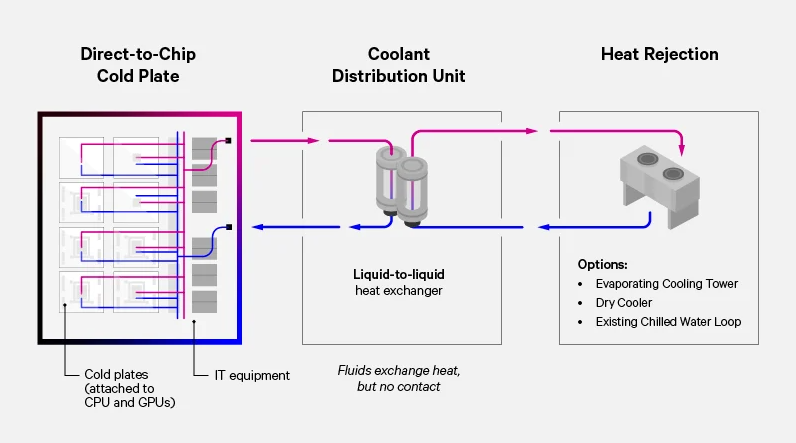

Direct-to-Chip (D2C) cooling is a highly efficient liquid cooling method that removes heat directly from components like CPUs and GPUs. It circulates coolant through cold plates mounted on these components and on to heat exchangers, where the heat is dissipated into the ambient environment. D2C systems support both single-phase cooling, which typically uses a water-glycol mixture, and two-phase cooling, which uses a dielectric fluid that boils at the cold plate, making them versatile for various data center cooling needs.

In a two-phase system, the coolant absorbs heat and vaporizes, carrying the heat to the heat exchanger, where the vapor condenses back to liquid and releases the heat, which is then rejected outside the rack.

Figure 1. [Understanding Direct-to Chip]

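The advantage of the two-phase process comes from latent heat: a kilogram of boiling coolant absorbs far more heat than a kilogram that only warms up. The sketch compares the coolant mass flow needed for a roughly 1 kW GPU. Every property value in it is an illustrative assumption rather than data for a particular product: about 3,900 J/(kg·K) for a water-glycol mix, about 1,100 J/(kg·K) and a latent heat of about 140 kJ/kg for a dielectric fluid, a 10 K temperature rise, and 50% vapor quality at the cold plate exit.

```python
# Coolant mass flow needed to absorb a given heat load.
# Single-phase: heat is carried as sensible heat,  m_dot = P / (cp * dT)
# Two-phase:    heat is carried as latent heat,    m_dot = P / (x_out * h_fg)
# All fluid properties below are illustrative assumptions, not vendor data.

def single_phase_flow(power_w, cp_j_per_kg_k, delta_t_k):
    """Mass flow (kg/s) for a liquid that warms by delta_t_k without boiling."""
    return power_w / (cp_j_per_kg_k * delta_t_k)

def two_phase_flow(power_w, h_fg_j_per_kg, exit_quality):
    """Mass flow (kg/s) for a coolant that partly evaporates; exit_quality is
    the vapor mass fraction leaving the cold plate (0 < exit_quality <= 1)."""
    if not 0 < exit_quality <= 1:
        raise ValueError("exit_quality must be in (0, 1]")
    return power_w / (exit_quality * h_fg_j_per_kg)

chip_w = 1000.0  # a ~1 kW GPU
water_glycol = single_phase_flow(chip_w, cp_j_per_kg_k=3900.0, delta_t_k=10.0)
dielectric_1p = single_phase_flow(chip_w, cp_j_per_kg_k=1100.0, delta_t_k=10.0)
dielectric_2p = two_phase_flow(chip_w, h_fg_j_per_kg=140_000.0, exit_quality=0.5)

for name, m_dot in [("water-glycol, single-phase", water_glycol),
                    ("dielectric, single-phase", dielectric_1p),
                    ("dielectric, two-phase", dielectric_2p)]:
    print(f"{name:28s} {m_dot * 1000:6.1f} g/s")
```

Because the two-phase coolant absorbs most of its heat as latent heat at a nearly constant boiling temperature, it needs far less mass flow than the same dielectric fluid used in single-phase mode.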

A key component of Direct-to-Chip (D2C) cooling is the Coolant Distribution Unit (CDU), which manages liquid cooling for individual servers or entire racks and can be installed inside the rack. The largest modern CDUs can dissipate up to 2.3 MW of heat, making them well suited to the growing thermal loads of today’s high-performance data centers.

While D2C cooling offers exceptional heat dissipation, it requires additional infrastructure, such as dedicated space for CDUs, which can limit compute density in server racks. For greater flexibility, the hot liquid can be routed to external cooling systems, which suits scalable data center setups and environments with higher cooling demands.

Heat Load Capacity: 20 to 80 kW per rack

- Rear Door Heat Exchanger (RDHx)

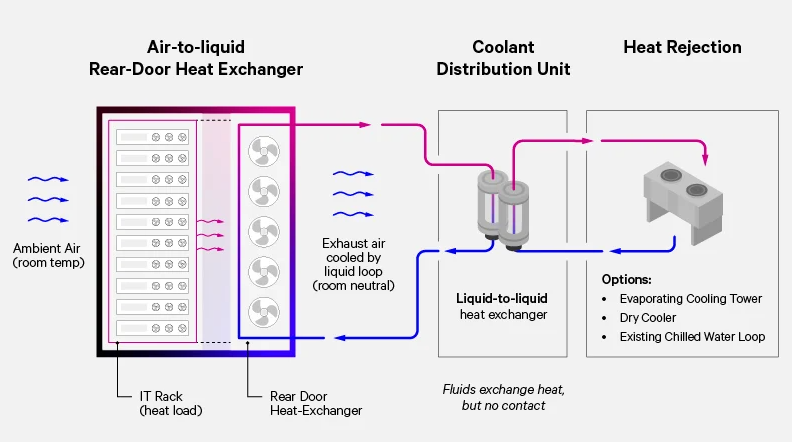

For data centers that cannot easily modify their infrastructure but still need effective cooling, RDHx offers a practical solution. An RDHx can remove up to 100% of a rack’s heat, making it well suited to “hybrid liquid cooling.” In this method, a specialized rear door containing a liquid-cooled coil (and, in active designs, fans) is attached to the back of the server rack. As hot air exits the servers, it passes through the coil and is cooled immediately, so cooler air returns to the data center.

Figure 2. [Rear-Door Heat Exchanger]


RDHx is particularly useful for facilities where the rack layout cannot be changed, and it integrates smoothly into existing systems without significant upgrades. Although it does not cool the CPU directly as D2C does, it is highly effective at reducing overall rack temperatures, ensuring stable performance across the data center. RDHx units are low-maintenance, flexible solutions for heat rejection in high-performance data centers, and they can cut cooling energy by 70-80% at the rack level and by 40-50% across the data center.

Data centers require adaptable and scalable cooling solutions to meet evolving needs. A hybrid approach combining liquid and air-cooling provides this flexibility. Liquid cooling can be applied in high-density zones where heat dissipation is most demanding, while air-cooling is well-suited for managing lower heat loads. This strategy allows data center operators to optimize cooling efficiency, adjust to fluctuating workloads, and ensure effective use of resources.

Heat Load Capacity: 20 to 35 kW per rack
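To make the hybrid approach concrete, the sketch below splits a rack’s heat between the liquid loop and the room air. The 80% liquid capture ratio is a hypothetical figure for illustration; the real share depends on which components carry cold plates. The 20 to 35 kW range is the RDHx heat load capacity quoted above.

```python
# Split a rack's heat load between the liquid loop and the room air.
# capture_ratio = fraction of IT heat removed by the liquid loop (assumed).

def split_heat(rack_kw, capture_ratio):
    """Return (liquid_kw, air_kw) for a hybrid-cooled rack."""
    if not 0.0 <= capture_ratio <= 1.0:
        raise ValueError("capture_ratio must be between 0 and 1")
    liquid_kw = rack_kw * capture_ratio
    air_kw = rack_kw - liquid_kw
    return liquid_kw, air_kw

# Hypothetical example: a 120 kW rack whose cold plates capture 80% of the heat.
liquid, air = split_heat(120.0, 0.80)
rdhx_range_kw = (20.0, 35.0)  # RDHx heat load capacity quoted in the text
print(f"to liquid loop: {liquid:.0f} kW, left in the air: {air:.0f} kW")
print("within RDHx range:", rdhx_range_kw[0] <= air <= rdhx_range_kw[1])
```

In this hypothetical case, the 24 kW left in the air falls within the RDHx range, which is the kind of pairing a hybrid strategy aims for.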

- Immersion Cooling

Immersion cooling is a cutting-edge solution designed for data centers managing extremely high heat loads or operating in space-constrained environments. In this method, entire servers are submerged in a non-conductive, non-corrosive liquid that directly absorbs heat from the components. As the liquid heats up, it rises, and the hot liquid is pumped to an external cooling system to be cooled.

This advanced liquid cooling technique is particularly effective for high-performance workloads and facilities where space optimization and cooling efficiency are essential. With the ability to manage heat loads exceeding 45 kW per rack, immersion cooling is the ideal choice for dense data center environments and specialized applications requiring superior thermal management and compact design.

Heat Load Capacity: 40 to 100+ kW per rack

Components of Liquid Cooling Systems

- Coolant Distribution Units (CDUs)

CDUs are vital components in advanced liquid cooling systems for data centers. They serve as the control hub, managing the distribution of coolant to maintain ideal temperature and pressure levels. This precise regulation is essential for ensuring the optimal performance and longevity of server cold plates, while also maximizing the overall stability and efficiency of the cooling system. By improving energy efficiency and cooling capacity, CDUs help to significantly lower operational costs in high-density computing environments.

Function: CDUs regulate the distribution of coolant, keeping its temperature and pressure within the required parameters. One of a CDU’s most important tasks is keeping the coolant temperature above the dew point, which prevents condensation and protects the equipment from moisture-related issues. Beyond basic cooling, CDUs contribute significantly to system reliability: they incorporate fine filtration, often 50-micron filters or finer, to keep contaminants out of the coolant and the server cold plates.

Operation: CDUs create a secondary coolant loop isolated from the facility’s chilled water supply, keeping the coolant within its optimal range and free of contamination, which extends the life of the cooling system.

Placement: CDUs can be placed in various locations, including in-rack, in-row, or centralized, depending on the desired level of redundancy and efficiency.
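The dew-point requirement above can be checked with the Magnus approximation, a widely used empirical formula for dew point from air temperature and relative humidity. The sketch is illustrative only: the room conditions (27 °C at 60% relative humidity) and the 2 K safety margin are hypothetical, and real CDUs implement their own vendor-specific control logic.

```python
import math

# Magnus-formula dew point approximation (constants b = 17.62, c = 243.12 C),
# reasonably accurate over typical indoor temperatures.
MAGNUS_B = 17.62
MAGNUS_C = 243.12  # degrees C

def dew_point_c(air_temp_c, relative_humidity_pct):
    """Approximate dew point (C) from dry-bulb temperature and relative humidity."""
    if not 0 < relative_humidity_pct <= 100:
        raise ValueError("relative humidity must be in (0, 100]")
    gamma = math.log(relative_humidity_pct / 100.0) + MAGNUS_B * air_temp_c / (MAGNUS_C + air_temp_c)
    return MAGNUS_C * gamma / (MAGNUS_B - gamma)

def supply_temp_is_safe(supply_c, air_temp_c, rh_pct, margin_k=2.0):
    """True if the coolant supply stays at least margin_k above the room dew point.
    The 2 K default margin is an illustrative assumption, not a standard."""
    return supply_c >= dew_point_c(air_temp_c, rh_pct) + margin_k

# Hypothetical white-space conditions: 27 C air at 60% relative humidity.
td = dew_point_c(27.0, 60.0)
print(f"dew point ~{td:.1f} C")
for supply in (15.0, 18.0, 25.0):
    print(supply, "C supply safe:", supply_temp_is_safe(supply, 27.0, 60.0))
```

For these conditions the dew point is about 18.6 °C, so a supply temperature in the high teens would risk condensation, while 25 °C would not.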

Figure 3. Coolant Distribution Units


CDUs (Coolant Distribution Units) play a vital role in direct-to-chip cooling systems, rear-door heat exchangers (RDHx), and immersion cooling setups. By optimizing heat transfer and maintaining stable cooling conditions, CDUs are essential for modern liquid-cooled data centers, ensuring energy-efficient operation and extending the lifespan of critical hardware.

There are three main types of CDUs: liquid-to-liquid, liquid-to-air, and liquid-to-refrigerant. Each type has its own advantages and disadvantages, which we will explore below.

- Liquid-to-Liquid CDU: This type of CDU keeps the IT equipment’s cooling loop separate from the facility’s main chilled water system. It requires the chilled water system to operate continuously and protects water quality through filtration. However, it can lengthen deployment timelines because pipes and pumps must be installed.

- Liquid-to-Air CDU: This type of CDU provides an independent secondary fluid loop to the rack, dissipating heat from IT components even without chilled water access. It’s a great option for speeding up liquid cooling deployment, as it utilizes existing room cooling units for heat rejection.

- Liquid-to-Refrigerant CDU: This type of CDU rejects heat through a direct expansion (DX) refrigerant circuit while supplying liquid for direct-to-chip cooling. It makes the most of existing DX infrastructure while adding liquid cooling capacity where needed. It’s a great option for data centers that don’t have onsite chilled water, enabling modular setups without completely overhauling the existing cooling infrastructure.

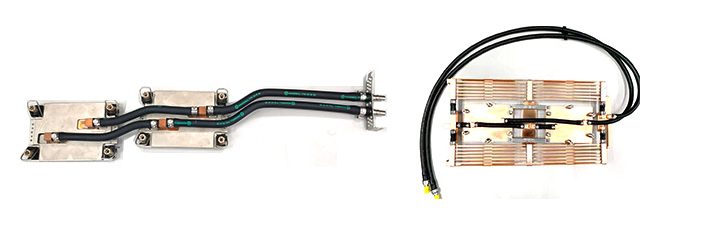

- Cold Plates

Cold Plates are state-of-the-art thermal management devices engineered to efficiently remove heat from high-performance components, including CPUs and GPUs. They feature integrated tubing or flow channels through which liquid coolant flows directly, absorbing heat from the component. Positioned on the heat source, cold plates provide a direct, effective path for heat transfer to the coolant, helping maintain optimal temperatures and ensuring stable operation of critical electronics.

Figure 4. [Delta Cold Plate Loops]

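A simple way to reason about cold plate performance is a thermal-resistance budget: the processor’s case temperature is roughly the coolant temperature plus the heat load times the cold plate’s case-to-fluid thermal resistance. The sketch below assumes a 1,000 W GPU, a hypothetical 30 °C coolant inlet, a 55 °C case limit (in line with the mid-50s °C device temperatures mentioned earlier), a water-like coolant, and the 1.5 L/min per kW flow rule of thumb. It conservatively uses the coolant outlet temperature and ignores TIM, flow maldistribution, and hot spots.

```python
# Thermal-resistance budget for a cold plate (all inputs are illustrative assumptions).
# T_case ~= T_coolant_out + P * R_case_to_fluid   (conservative: uses the outlet temperature)

WATER_DENSITY = 1000.0   # kg/m^3 (approximation for a water-based coolant)
WATER_CP = 4180.0        # J/(kg*K)

def coolant_rise_k(power_w, flow_lpm):
    """Coolant temperature rise across the cold plate for a given flow in L/min."""
    mass_flow = flow_lpm / 60.0 / 1000.0 * WATER_DENSITY  # L/min -> m^3/s -> kg/s
    return power_w / (mass_flow * WATER_CP)

def max_thermal_resistance(power_w, t_inlet_c, t_case_max_c, flow_lpm):
    """Largest case-to-fluid resistance (K/W) that keeps T_case <= t_case_max_c."""
    t_outlet = t_inlet_c + coolant_rise_k(power_w, flow_lpm)
    headroom = t_case_max_c - t_outlet
    if headroom <= 0:
        raise ValueError("coolant outlet is already above the case limit")
    return headroom / power_w

gpu_w = 1000.0
flow = 1.5 * gpu_w / 1000.0  # 1.5 L/min per kW rule of thumb
rise = coolant_rise_k(gpu_w, flow)
r_max = max_thermal_resistance(gpu_w, t_inlet_c=30.0, t_case_max_c=55.0, flow_lpm=flow)
print(f"coolant rise: {rise:.1f} K, required R_case-to-fluid <= {r_max:.4f} K/W")
```

With these inputs the coolant warms by about 9.6 K and the cold plate must achieve roughly 0.015 K/W, which illustrates why falling case temperature limits push designers toward microchannel cold plates and colder coolant.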

Types and Designs of Cold Plates

Cold plates are designed to handle a variety of cooling fluids, in either single-phase or two-phase cooling systems. The design is often tailored to the specific cooling fluid, ranging from simple metal blocks with embedded piping to sophisticated models with micro-channels for enhanced thermal performance. These micro-channels, either skived or molded, direct the cooling fluid across the plate, increasing the heat exchange surface area and the cooling efficiency.

Cold plates can be classified into two main types:

- 1-Piece Cold Plates: These integrate the fluid heat exchanger and retention bracket into a single unit, providing a robust but inflexible solution for component upgrades.

- 2-Piece Cold Plates: Here, the retention bracket is a separate component, allowing the heat exchanger to be reused across processor generations by updating only the bracket, making them a cost-effective choice.

Figure 5. [1-Piece and 2-Piece Cold Plates]

Key Components of a Cold Plate Assembly

Cold Plate Base and Top Cover: The base contacts the processor through an applied thermal interface material (TIM2) to improve heat transfer, while the top cover encloses the fluid channels and integrates fluid connectors for secure, leak-free operation.

Fluid Flow Channels: These channels are the core of the cold plate’s cooling function, transporting the coolant through a series of engineered pathways between the base and cover. This setup ensures efficient heat dissipation from the processor directly to the cooling fluid.

Manufacturing Process: Channeled cold plates are typically manufactured by skiving or electrical discharge machining (EDM).

- Skiving is a continuous process that cuts, or shaves, thin slices of material and raises them into a finned structure. As with skived heat sinks, the achievable fin spacing and profile are limited, and the parts require post-grinding, polishing, and additional machining.

- EDM removes metal in a controlled way through electric spark erosion. The electric discharge vaporizes the material without applying mechanical force, so the process achieves high accuracy and extremely intricate features such as narrow slots and fragile outlines. However, EDM is slow, consumes a lot of power, needs a constant feed of dielectric fluid, and is not suited to high volumes. The tool electrode erodes heavily and must be replaced frequently.

| Process | Typical footprint | Production lead time | Batch size | Min. thickness | Tolerance / accuracy |
|---|---|---|---|---|---|
| Skiving | (30-75) mm x (30-75) mm x (6-22) mm | 10 weeks | 4000-5000 | 0.1 mm | +/-0.02 mm |
| EDM | (10-25) mm x (10-25) mm | 10 weeks | 1500-2000 | 0.072 mm | +/-0.0025 mm |

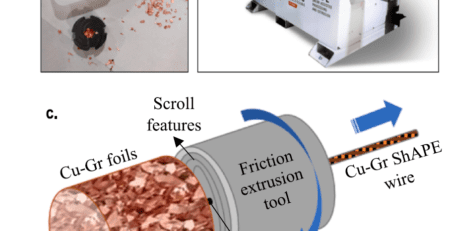

With the rise of 3D printing (3DP) technology, a new frontier has emerged in designing and manufacturing liquid cold plates. This innovation allows engineers to create custom, application-specific geometries tailored to precise thermal performance requirements, offering unparalleled design flexibility. Additive manufacturing (AM) processes can create complex shapes with optimized fin spacing, similar to skived heat sinks, while minimizing material waste, a crucial factor in sustainable manufacturing. But how far can 3D printing truly go in reshaping the landscape of thermal management?

Key Advantages of 3D Printing for Heat Sink Design

- Enhanced Design Flexibility and Sustainability: 3D printing allows the creation of custom heat sink profiles, such as gyroid structures, that match specific thermal performance goals. Its additive process not only minimizes waste but also improves supply chains and extends product lifecycles. Are there specific supply chain improvements that experts foresee with 3D printing compared to traditional manufacturing?

- Lattice Structures for Optimized Strength and Weight: By using lattice structures, heat sinks can achieve an impressive strength-to-weight ratio, reducing material use without compromising durability. This is especially beneficial for lightweight applications where both strength and efficiency are paramount. What are the challenges in designing lattice structures for thermal applications, and how do they impact performance?

- Microchannel Cold Plates with Improved Feature Sizes: Cold plates can benefit significantly from 3D printing. With achievable feature sizes of 100 μm fin thickness, 680 μm fin height, and 100 μm fin spacing, 3D printing offers an opportunity to improve microchannel designs with even thinner features (down to 50 μm). This not only enhances heat dissipation but also cuts costs by eliminating the need for traditional tooling.

- Reduction in Material and Manufacturing Steps: The need for complex, multi-step operations in conventional metalwork is effectively eliminated with 3D printing. Complex designs that once required multiple processes can now be produced in a single manufacturing step, thus reducing cost and production time. Could these streamlined steps eventually support full production scalability?

- Size, Weight, and Volume Optimization: By incorporating lightweight design options, 3D-printed cold plates not only improve performance but also decrease overall system size, weight, and volume—leading to substantial cost savings. How does this optimization affect long-term reliability, especially in extreme operating environments?
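The microchannel feature sizes quoted above can be turned into rough geometric figures of merit. The sketch models the cold plate as straight rectangular channels between fins, assuming the cover rests on the fin tips and ignoring fin efficiency, and computes the heated-area enhancement over a flat plate, the channel hydraulic diameter, and the fin density. It assumes the 50 μm figure refers to fin thickness; this is a simplified geometric model, not a thermal simulation.

```python
# Geometry of a straight rectangular microchannel fin array (illustrative model).
# Assumes the cover sits on the fin tips, so only the channel floor and the
# two fin side walls per pitch count as heated wetted area; fin efficiency ignored.

def channel_metrics(fin_thickness_um, fin_height_um, channel_width_um):
    """Return (area_enhancement, hydraulic_diameter_um, fins_per_cm)."""
    pitch = fin_thickness_um + channel_width_um
    # Wetted heated perimeter per pitch: channel floor + two fin side walls.
    wetted = channel_width_um + 2 * fin_height_um
    area_enhancement = wetted / pitch  # heated area per unit of base (footprint) area
    # Hydraulic diameter of one rectangular channel: 4 * area / full perimeter.
    d_h = 4 * channel_width_um * fin_height_um / (2 * (channel_width_um + fin_height_um))
    fins_per_cm = 10_000 / pitch  # 1 cm = 10,000 um
    return area_enhancement, d_h, fins_per_cm

# Feature sizes quoted in the text for 3D-printed cold plates.
ae, dh, fpc = channel_metrics(fin_thickness_um=100, fin_height_um=680, channel_width_um=100)
print(f"area enhancement ~{ae:.1f}x, hydraulic diameter ~{dh:.0f} um, {fpc:.0f} fins/cm")

# Thinner 50 um fins at the same spacing pack more channels into the same footprint.
ae50, _, fpc50 = channel_metrics(fin_thickness_um=50, fin_height_um=680, channel_width_um=100)
print(f"with 50 um fins: area enhancement ~{ae50:.1f}x, {fpc50:.0f} fins/cm")
```

With 100 μm fins, 100 μm channels, and 680 μm height, the heated area is roughly 7.3 times the footprint; thinning the fins to 50 μm at the same spacing raises that to nearly 10 times.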

The Open Questions on 3D Printing for Thermal Solutions

While 3D printing offers numerous advantages, it is essential to address some of the technology’s limitations:

- Porosity Challenges: Porosity can be a major concern, as it impacts the thermal and structural integrity of liquid cold plates. How can we address porosity in 3D-printed metal components, and are there post-processing methods that improve reliability?

- Full Assembly-Free Cold Plates: Could we reach a point where a 3D-printed cold plate eliminates the need for assembly? This would be revolutionary in simplifying design and production, but how feasible is it given current 3D printing capabilities?

- Suitability for Mass Production: Finally, is 3D printing ready for high-volume production of thermal components? The adaptability and customization are undeniable, but the costs and scalability challenges remain. Would mass production compromise the quality benefits achieved through 3D printing?

These questions open the door for industry experts to weigh in on the viability of 3D printing as a mainstream solution for high-performance thermal management. As we advance, could the combination of microchannel and lattice designs unlock even greater potential for sustainable, high-efficiency heat sinks? 3D printing continues to challenge traditional boundaries, and its future role in thermal design is sure to provoke thoughtful debate and innovative solutions.

- Coolant Distribution Manifolds (CDMs)

Function: CDMs distribute the coolant to multiple cold plates, ensuring uniform cooling across all components.

Types: CDMs can be horizontal or vertical, depending on the server configuration.

Figure 6. [Motivair Coolant Distribution Manifolds]

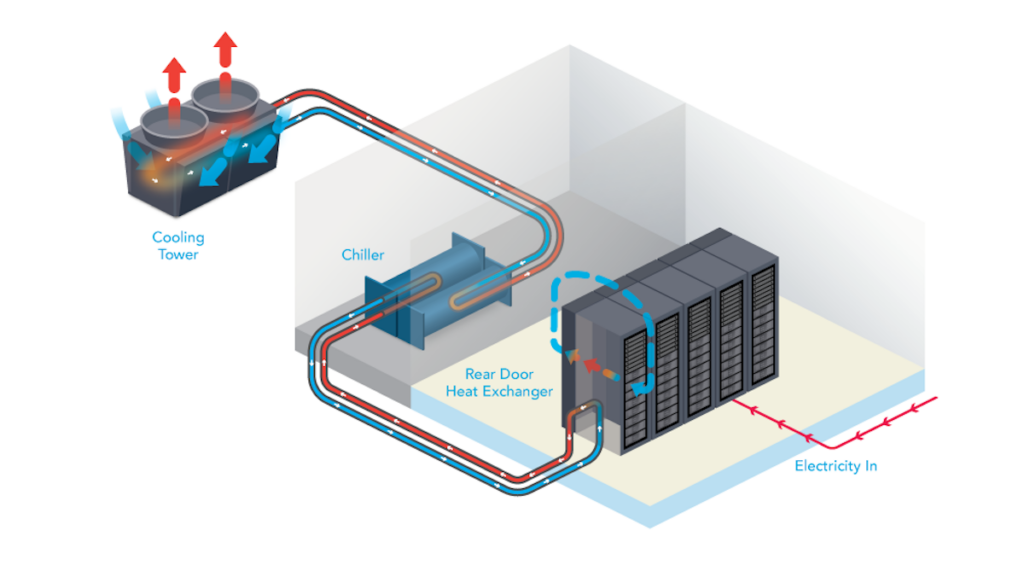

- Cooling Tower

Cooling towers are a critical component of liquid cooling systems in data centers, specifically designed to reject heat from the coolant to the ambient environment. By dissipating the heat generated by high-performance computing systems, they maintain the optimal operation of data center infrastructure. These towers effectively transfer heat absorbed by the coolant from servers and other equipment into the surrounding air or water, preventing overheating and ensuring operational reliability.

Types of Cooling Towers

Modern cooling towers in data center environments are often modular, providing exceptional design flexibility and scalability. These modular cooling towers are particularly beneficial for direct liquid cooling (DLC) systems. DLC systems, which extract heat directly from high-performance components such as CPUs and GPUs using liquid coolant, leverage the customizable design of modular cooling towers. These towers can be tailored to meet the specific cooling needs of individual racks or zones, enhancing energy efficiency and minimizing operational costs.

Benefits of Cooling Towers in Liquid Cooling Modules

Energy Efficiency: By leveraging ambient air and water resources, cooling towers minimize the reliance on energy-intensive refrigeration systems.

Scalability: Modular designs allow data centers to expand cooling capacity as needed, accommodating growth without significant infrastructure overhauls.

Sustainability: With proper design, cooling towers can utilize water in a closed-loop system, reducing environmental impact and conserving resources.

Enhanced Performance: By effectively rejecting heat, cooling towers ensure the coolant temperature remains low, enabling DLC systems to operate at peak efficiency and maintain server reliability.
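The sustainability point above hinges on water use. When a cooling tower rejects heat by evaporation, a first-order estimate of the water consumed is the heat rejected divided by water’s latent heat of vaporization (about 2.43 MJ/kg near 30 °C). The sketch ignores sensible heat transfer to the air, drift, and blowdown, so it gives a rough figure rather than a design value; the 1 MW load is hypothetical.

```python
# First-order estimate of evaporative water use in a cooling tower:
# evaporated mass ~= heat rejected / latent heat of vaporization of water.
# Ignores sensible heat transfer to the air, drift, and blowdown (simplifying assumptions).

H_FG_WATER = 2.43e6  # J/kg, latent heat of vaporization near 30 C

def evaporation_m3_per_hour(heat_rejected_w, evaporative_fraction=1.0):
    """Approximate water evaporated (m^3/h). evaporative_fraction is the share of
    heat rejected by evaporation rather than by warming the air (assumed)."""
    kg_per_s = heat_rejected_w * evaporative_fraction / H_FG_WATER
    return kg_per_s * 3600.0 / 1000.0  # kg/s -> kg/h -> m^3/h (about 1000 kg per m^3)

# Hypothetical 1 MW of IT heat rejected through the tower.
print(f"~{evaporation_m3_per_hour(1e6):.2f} m^3/h of water evaporated per MW")
```

Under these assumptions, each megawatt rejected purely by evaporation consumes roughly 1.5 m³ of water per hour.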

Cooling towers play a crucial role in hybrid cooling systems, working alongside chillers, economizers, and other thermal management solutions. In warm climates, cooling towers use evaporative cooling to boost heat rejection capabilities and ensure efficient operations. Conversely, in cooler regions, they switch to free cooling modes, drastically reducing the Power Usage Effectiveness (PUE) of the data center. This adaptability makes them a cornerstone of modern data center thermal management strategies.
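PUE is the ratio of total facility power to the power delivered to IT equipment, usually measured as energy over a period such as a year. The sketch below uses hypothetical overhead figures for a 1 MW IT load to show how cutting cooling power, for example by switching to a free cooling mode, moves PUE toward the ideal value of 1.0.

```python
# Power Usage Effectiveness: total facility power divided by IT equipment power.
# A PUE of 1.0 would mean every watt goes to IT equipment.

def pue(it_kw, cooling_kw, other_overhead_kw):
    """PUE = (IT + cooling + other overhead) / IT."""
    if it_kw <= 0:
        raise ValueError("IT load must be positive")
    return (it_kw + cooling_kw + other_overhead_kw) / it_kw

# Hypothetical 1 MW IT load: mechanical cooling vs. a free-cooling mode.
print(f"mechanical cooling: PUE {pue(1000, 400, 100):.2f}")
print(f"free cooling:       PUE {pue(1000, 150, 100):.2f}")
```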

By incorporating modular cooling towers into liquid cooling systems, data centers can support high-density computing while addressing sustainability and energy consumption challenges.

Figure 7. [Defining Liquid Cooling in the Data Center]

- Pumps

Pumps are the lifeline of liquid cooling systems in data centers, responsible for circulating the coolant through the network of pipes, heat exchangers, and cooling towers. Their primary function is to ensure efficient and continuous heat transfer from the data center equipment to the heat rejection components. By maintaining the desired flow rate, pumps enable direct liquid cooling (DLC) systems to dissipate the heat generated by servers, GPUs, and other high-performance components effectively.

Types and Selection of Pumps

The type and size of pumps used in a liquid cooling system are carefully selected based on several key factors:

System Capacity: Larger data centers with higher cooling loads require pumps with greater flow rates and pressure capabilities to handle the increased volume of coolant.

Flow Rate Requirements: The flow rate is critical to ensuring adequate heat transfer. High-performance servers may demand higher flow rates to maintain optimal cooling efficiency.

Pressure Drops: The system design, including pipe length, bends, and restrictions, determines the pump’s pressure head requirements.

Common types of pumps in data center liquid cooling systems include:

Centrifugal Pumps: Known for their reliability and efficiency, these pumps are widely used in large-scale cooling systems.

Variable Speed Pumps: These pumps adjust their speed based on the cooling demand, offering significant energy savings and operational flexibility.

Magnetic Drive Pumps: Used in systems requiring leak-free operation, these pumps are ideal for high-reliability environments.

Pumps are often integrated with control systems to monitor and manage flow rates, pressures, and temperatures dynamically. This ensures the liquid cooling system operates at peak efficiency while preventing issues such as cavitation or pump wear. In systems with multiple cooling loops, pumps play a critical role in managing the primary and secondary loops, optimizing heat transfer between servers and heat rejection systems like cooling towers or dry coolers.
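The selection factors above (system capacity, flow rate, and pressure drop) can be tied together in a first-pass sizing calculation. The sketch uses the 1.5 L/min per kW rule of thumb quoted earlier to set the flow for the roughly 120 kW rack example, then estimates the coolant temperature rise and the pump shaft power from P_shaft = Q · Δp / η. The 150 kPa loop pressure drop and 50% pump efficiency are hypothetical placeholders, not values from any vendor.

```python
# Pump sizing sketch for a secondary (rack) loop. All inputs are illustrative assumptions.
# Flow:         Q = heat_kw * flow_per_kw          (flow_per_kw in L/min per kW)
# Coolant rise: dT = P / (rho * cp * Q)
# Pump power:   P_shaft = Q * dp / efficiency      (Q in m^3/s, dp in Pa)

RHO = 1000.0   # kg/m^3, water-like coolant
CP = 4180.0    # J/(kg*K)

def size_pump(heat_kw, flow_lpm_per_kw=1.5, loop_dp_kpa=150.0, pump_efficiency=0.5):
    flow_lpm = heat_kw * flow_lpm_per_kw
    flow_m3s = flow_lpm / 1000.0 / 60.0                      # L/min -> m^3/s
    delta_t = heat_kw * 1000.0 / (RHO * CP * flow_m3s)       # coolant temperature rise, K
    hydraulic_w = flow_m3s * loop_dp_kpa * 1000.0            # power delivered to the fluid
    shaft_w = hydraulic_w / pump_efficiency                  # power the pump must draw
    return {"flow_lpm": flow_lpm, "delta_t_k": delta_t,
            "hydraulic_w": hydraulic_w, "shaft_w": shaft_w}

result = size_pump(120.0)  # the ~120 kW rack discussed earlier
for key, value in result.items():
    print(f"{key:12s} {value:8.1f}")
```

With these inputs the loop needs about 180 L/min, the coolant warms by roughly 9.6 K, and the pump draws on the order of 900 W. Real sizing would read the operating point off the pump curve and the measured system curve.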

In 2023, the Open Compute Project (OCP) began building on the guidelines documented by ASHRAE, extending them with design collateral for server reference designs. This expanded collateral includes items such as liquid loop designs, cold plate designs, and quick-connect designs.

The OCP has also focused on categorizing the facility water loop based on delivered fluid temperatures into three groups:

- Group 1: High (40-45°C)

- Group 2: Medium (30-37°C)

- Group 3: Low (15-25°C)

Quality Considerations in Direct Water-Cooling Systems

Direct water-cooling systems offer significant advantages in terms of thermal performance and energy efficiency. However, maintaining the quality of the cooling fluid is crucial to ensure long-term reliability and optimal performance.

Common Challenges

Scaling: The accumulation of mineral deposits on cooling surfaces can reduce heat transfer efficiency.

Fouling: The buildup of organic matter and other contaminants can impede fluid flow and promote corrosion.

Corrosion: Chemical reactions between the cooling fluid and system components can lead to material degradation and system failure.

Microbiological Growth: The proliferation of microorganisms can cause fouling, corrosion, and system contamination.

Preventive Measures

To mitigate these challenges, several preventive measures should be implemented:

Water Quality Control:

Water Treatment: Employ appropriate water treatment techniques, such as filtration, softening, and chemical dosing, to remove impurities and maintain water quality.

Regular Water Testing: Conduct regular water quality tests to monitor parameters like pH, conductivity, and microbial content.

Particulate Filtration for Direct-to-Chip Cooling: Early high-performance computing (HPC) systems used cold plates with 100 µm micro-channels to deliver cooling liquid directly to the chip. Since then, channel sizes have shrunk to 50 µm, and cold plates for the latest generation of GPUs use 27 µm micro-channels. As a result, particulate filtration at 25 µm or finer is essential to prevent blockages. Keeping the secondary fluid network clean is crucial when implementing direct-to-chip liquid cooling, as even microscopic contaminants can compromise thermal performance and system reliability.

System Design and Materials Selection:

Material Compatibility: Choose materials that are resistant to corrosion and compatible with the cooling fluid.

Flow Optimization: Design the cooling system to ensure adequate fluid flow and minimize stagnant areas.

Effective Filtration: Implement high-quality filtration systems to remove particulate matter and prevent fouling.

Maintenance and Monitoring:

Regular Inspection: Conduct routine inspections to identify and address potential issues early.

Cleaning and Flushing: Periodically clean and flush the system to remove contaminants and prevent buildup.

Biocide Treatment: Implement a regular biocide treatment program to control microbial growth.

Temperature Monitoring: Monitor the temperature of critical components to ensure optimal operating conditions.

By carefully considering these factors and implementing appropriate preventive measures, it is possible to maintain the long-term reliability and efficiency of direct water-cooling systems.

Applications of Liquid Cooling

AI Accelerators

Artificial intelligence (AI) and machine learning (ML) have revolutionized the way we live and work, transforming industries and applications in unprecedented ways. However, the computational demands of AI tasks require specialized hardware components to achieve their full potential. AI accelerators are purpose-built to enhance AI and ML performance, significantly boosting the speed and efficiency of AI workloads.

AI accelerators are specialized hardware components optimized for the unique computational requirements of AI tasks, such as deep learning and neural network processing. Unlike traditional processors, AI accelerators excel in managing massive datasets and complex calculations, making them indispensable in industries such as healthcare, finance, autonomous vehicles, and technology innovation.

Types of AI Accelerators

AI accelerators come in various forms, each designed to handle specific types of AI workloads. The most common ones are:

- Graphics Processing Units (GPUs): Initially designed for graphics rendering, GPUs excel at parallel processing, making them ideal for handling the large-scale computations required in AI applications. Their ability to perform many tasks simultaneously has made them a popular choice for AI applications.

- Tensor Processing Units (TPUs): Developed by Google, TPUs are specifically designed for tensor operations, a type of mathematical operation involving multi-dimensional arrays, which are fundamental to deep learning algorithms. TPUs offer unparalleled performance and efficiency for deep learning workloads.

- Field-Programmable Gate Arrays (FPGAs): These are configurable hardware components that can be programmed to perform specific tasks, offering a balance between performance and flexibility. FPGAs are ideal for applications that require a high degree of customization and adaptability.

- Application-Specific Integrated Circuits (ASICs): Custom-built for specific applications, ASICs provide the highest performance and efficiency for dedicated AI tasks. However, they lack the flexibility of GPUs and FPGAs, making them less suitable for applications that require adaptability.

| AI GPU Architecture | Type of GPU | Power (W) | FP16 Tensor Performance (TFLOPS) | Networking Speed (GB/s) | Performance/Power (TFLOPS/W) |
|---|---|---|---|---|---|
| NVIDIA B200 | Discrete | 1000 | 1800 | 400 | 1.8 |
| NVIDIA B100 | Discrete | 700 | 2250 | 400 | 3.2 |
| Intel Gaudi 3 | Discrete | 900 (passive) / 1200 (active) | 1835 | 1200 | 2.0 / 1.5 |
| AMD Instinct MI300A | SoC/APU | 760 | 980 | 128 | 1.3 |
| AMD Instinct MI300X | Discrete | 750 | 1300 | 128 | 1.7 |
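The Performance/Power column is simply FP16 Tensor throughput divided by power. The sketch below recomputes it from the table's own values; it is a plain ratio that ignores sparsity, clock behavior, and system-level power.

```python
# Recomputes the Performance/Power column from the table's Power and
# FP16 Tensor columns (values copied from the table above).

GPUS = {
    # name: (power_w, fp16_tensor_tflops)
    "NVIDIA B200": (1000, 1800),
    "NVIDIA B100": (700, 2250),
    "Intel Gaudi 3 (passive)": (900, 1835),
    "Intel Gaudi 3 (active)": (1200, 1835),
    "AMD Instinct MI300A": (760, 980),
    "AMD Instinct MI300X": (750, 1300),
}


def tflops_per_watt(power_w: float, tflops: float) -> float:
    """Performance per watt, in TFLOPS/W."""
    return tflops / power_w


if __name__ == "__main__":
    # Print from most to least efficient.
    ranked = sorted(GPUS.items(), key=lambda kv: -tflops_per_watt(*kv[1]))
    for name, (power, perf) in ranked:
        print(f"{name:26s} {tflops_per_watt(power, perf):.2f} TFLOPS/W")
```

Rounded to one decimal place, the results match the table (for example, 2250 / 700 ≈ 3.2 for the B100).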

| AI Accelerators | Type | Package (mm × mm) | Company | Operating Temp (°C) | Power (W) | Performance |
|---|---|---|---|---|---|---|
| WSE-3 | AI Processor | 215 x 215 | Cerebras | 20 - 30 | 2300 | 125 PFLOPS |
| AMD MI325X | AI Accelerator (MCM) | 1017 mm² (area) | AMD | 95 - 105 | 750 | 653 TFLOPS |
| Habana Gaudi 2 | ASIC | 61 x 61 | Intel | 100 - 110 | 350 | 400 TFLOPS |
| Google TPU v4 | ASIC | Custom | Google | n/a | 192 | 275 TFLOPS |
| C600 | AI Processor (IPU) | 267 x 111 | Graphcore | 10 - 55 | 185 | 280 TFLOPS |
| Versal AI Core | FPGA | 45 x 45 | AMD | 40 - 100 | 75 | 200 TOPS |
| Qualcomm Cloud AI 100 | ASIC | 237.9 x 111.2 | Qualcomm | 0 - 50 | 150 | 288 TOPS |
| Tenstorrent Grayskull | AI Accelerator | 259.8 x 111.2 | Tenstorrent | 0 - 75 | 200 | 322 TFLOPS |
| SAKURA-II | BGA | 19 x 19 | EdgeCortix | -40 to 85 | 8 | 60 TOPS |
| Hailo-8 | BGA | 22 x 42 | Hailo | -40 to 85 | 2.5 | 26 TOPS |
| Hailo-8L | BGA | 22 x 42 | Hailo | -40 to 85 | 1.5 | 13 TOPS |

Servers

Rack servers are purpose-built to fit within a framework called a server rack, enabling efficient use of space and seamless scalability. The rack features multiple mounting slots, commonly referred to as rack units (U), where hardware units are secured. Rack server sizes range from 1U to 8U, allowing businesses to select configurations tailored to their needs.

Rack servers are the foundation of many modern data center architectures. They are designed for optimal performance, scalability, and efficient use of space. A rack server includes all the elements of a traditional server, including a motherboard, memory, CPU, power supply, and storage subsystem. However, they are packaged in a way that facilitates better cooling and easier management. Rack servers offer several advantages in a data center environment. Firstly, they are highly scalable. As your business grows, you can add more servers to the rack. Secondly, they make efficient use of space. By stacking servers vertically, you can fit a large amount of computing power into a small area. Lastly, rack servers are designed for easy maintenance. Components can be easily accessed and replaced without disturbing other servers.
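Rack-unit arithmetic is what links server height to rack density. The sketch below uses the EIA-310 definition of 1U = 1.75 in (44.45 mm); the 4U reserved for switches and power distribution and the 6 kW per-server load are hypothetical inputs chosen for illustration, not figures from this article.

```python
# Rack-unit arithmetic. 1U = 1.75 in (44.45 mm) per EIA-310.
# The reserved space and per-server power used below are hypothetical.

RACK_UNIT_MM = 44.45


def servers_per_rack(rack_u: int, server_u: int, reserved_u: int = 0) -> int:
    """Servers of height server_u that fit after reserving space for switches, PDUs, etc."""
    usable = rack_u - reserved_u
    if usable < 0 or server_u <= 0:
        raise ValueError("invalid rack or server height")
    return usable // server_u


def rack_power_kw(n_servers: int, server_kw: float) -> float:
    """Total IT load of the rack in kW."""
    return n_servers * server_kw


if __name__ == "__main__":
    n = servers_per_rack(rack_u=42, server_u=2, reserved_u=4)  # 4U reserved: hypothetical
    print(f"42U rack = {42 * RACK_UNIT_MM:.0f} mm of mounting space")
    print(f"2U servers that fit: {n}")
    print(f"Rack IT load at a hypothetical 6 kW/server: {rack_power_kw(n, 6.0):.0f} kW")
```

Even with modest per-server power, a fully populated rack can pass 100 kW, which is the density range where air cooling struggles.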

Types of Server Racks

There are several types of server racks, each with its own features and applications. The most common include:

- Standard Server Racks: The most common type of rack, designed to hold multiple servers in a single frame.

- Specialty Server Racks: These racks are designed with specific equipment or applications in mind. For example, you might find a rack specifically designed for audio-visual equipment, network devices, or high-density server installations.

- Open-Frame Racks: These racks provide easy access to equipment and improved airflow, making them ideal for environments that require frequent changes to the server setup.

- Enclosed Racks: These racks offer added security and protection from dust and debris, making them suitable for environments where servers need to remain undisturbed for extended periods.

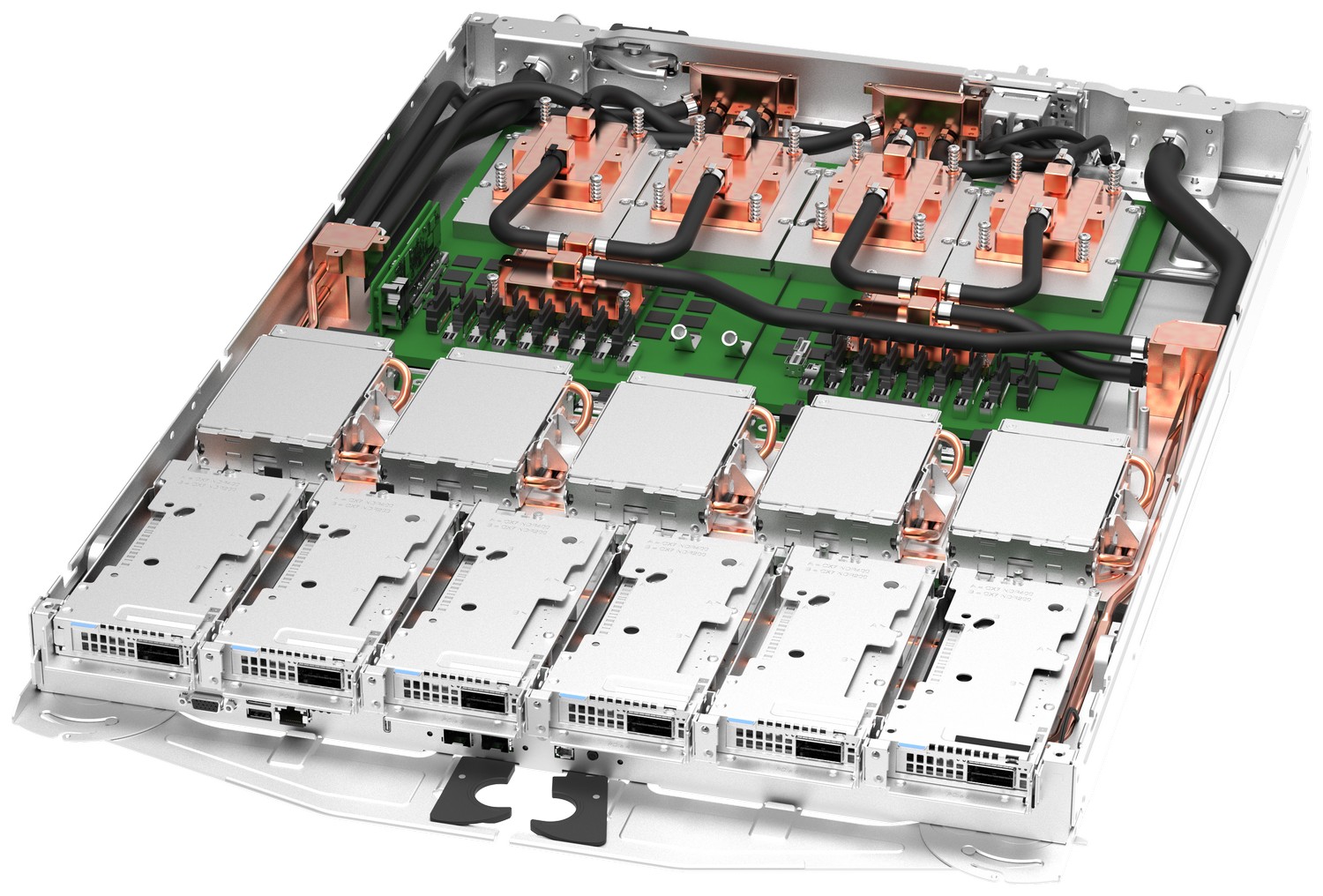

Maintaining proper airflow and cooling is essential for the performance and longevity of servers. Overheating can cause hardware failure and decrease the lifespan of your servers. Multicomponent liquid cooling involves cooling multiple components of a server, including the GPU, CPU, local storage, network fabric, and rack/cabinet. This comprehensive approach ensures that all heat-generating components are efficiently cooled, leading to improved performance and reliability. HPE’s 8-element cooling design, for instance, includes liquid cooling for all major components, resulting in a 90% reduction in cooling power consumption compared to traditional air-cooled systems.

Distribution, Redundancy, and Reliability

Distribution: Liquid cooling systems require careful planning for the distribution of coolant to ensure uniform cooling across all components. This involves the strategic placement of CDUs and CDMs.

Redundancy: Achieving N+1 redundancy at the row level means installing one more pump, CDU, and cooling tower than the load requires (for example, two pumps where one can carry the full load), which mitigates the impact of component failures. Centralizing all CDUs can optimize operations and enable faster scalability.

Reliability: Liquid cooling systems enhance the reliability of data centers by reducing the risk of overheating and extending the lifespan of components. Regular maintenance and monitoring are essential to ensure the system’s long-term performance.
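Coolant distribution and redundancy planning both start from the heat balance Q = ṁ · cp · ΔT. The sketch below sizes water flow for a rack and counts CDUs for N+1. It assumes plain water with cp ≈ 4.18 kJ/(kg·K) and density ≈ 0.997 kg/L; the 800 kW row and 300 kW CDU capacity are hypothetical example values.

```python
import math

# Assumptions: single-phase water coolant near 25 °C. Glycol mixtures have a
# lower cp and would need proportionally more flow.
WATER_CP_J_PER_KG_K = 4180.0
WATER_DENSITY_KG_PER_L = 0.997


def coolant_flow_lpm(heat_kw: float, delta_t_k: float) -> float:
    """Flow (L/min) needed to carry heat_kw at a supply/return temperature difference of delta_t_k."""
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    mass_flow_kg_s = heat_kw * 1000.0 / (WATER_CP_J_PER_KG_K * delta_t_k)  # Q = m_dot * cp * dT
    return mass_flow_kg_s / WATER_DENSITY_KG_PER_L * 60.0


def cdus_for_n_plus_1(load_kw: float, cdu_capacity_kw: float) -> int:
    """N units to carry the load, plus one spare so any single CDU can fail."""
    n = math.ceil(load_kw / cdu_capacity_kw)
    return n + 1


if __name__ == "__main__":
    print(f"100 kW rack at dT = 10 K: {coolant_flow_lpm(100, 10):.0f} L/min")
    # Hypothetical row: 8 racks x 100 kW served by 300 kW CDUs.
    print(f"CDUs for an 800 kW row (N+1): {cdus_for_n_plus_1(800, 300)}")
```

A 100 kW rack at a 10 K rise needs roughly 144 L/min of water; halving the allowed ΔT doubles the required flow, which is why distribution planning and pump sizing go together.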

Liquid Cooling Solutions for Enhanced Performance and Efficiency

To support large-scale AI workloads efficiently, organizations must adopt advanced cooling solutions. Direct liquid cooling stands out as the most effective option.

HPE (Hewlett Packard Enterprise) has established itself as a pioneer in liquid cooling technology, leveraging over 50 years of innovation and securing more than 300 patents in this field.

HPE’s liquid-cooled systems are designed to operate reliably for years, demonstrating clear advantages in sustainability. Within just the past two years, HPE introduced four of the world’s top ten fastest systems, all HPE Cray EX liquid-cooled supercomputers. This technology has also positioned HPE’s systems among the top performers on the Green500 list of energy-efficient supercomputers, underscoring the role of efficient cooling in high-performance computing.

HPE's direct liquid cooling architecture features:

- An 8-element cooling design that encompasses liquid cooling for GPUs, CPUs, server blades, storage, network fabric, rack/cabinet, clusters, and the coolant distribution unit (CDU).

- High-density, high-performance systems, complete with rigorous testing and monitoring software and supported by on-site services.

- An integrated network fabric optimized for massive scale, reducing power use and costs.

- An open architecture that allows flexibility in accelerator choices.

This direct-liquid-cooling design delivers substantial benefits, such as a 37% reduction in cooling power per server blade, which decreases utility costs, cuts carbon emissions, and lowers data center noise. Additionally, it enables greater cabinet density, reducing floor space requirements by 50%.

Figure 9. [HPE in Liquid Cooling]

For example, an HPC data center housing 10,000 liquid-cooled servers would emit about 1,200 tons of CO₂ annually, compared to over 8,700 tons from air-cooled servers, an 87% reduction. This efficiency translates into major cost savings: liquid-cooled servers cost $45.99 per server per year in energy versus $254.70 for air-cooled servers, saving about $2.1 million annually. Furthermore, over five years, liquid cooling requires 14.9% less power for the chassis and yields 20.7% higher performance per kW than air cooling, making it a compelling solution for sustainable and economical AI infrastructure.
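The arithmetic behind this example can be reproduced from the quoted figures alone. The sketch uses only the numbers in the paragraph above (10,000 servers, 1,200 vs 8,700 t CO₂ per year, $45.99 vs $254.70 per server per year).

```python
# Reproduces the example's arithmetic from the figures quoted in the text.

SERVERS = 10_000
CO2_LIQUID_T, CO2_AIR_T = 1_200, 8_700
COST_LIQUID_USD, COST_AIR_USD = 45.99, 254.70


def percent_reduction(new: float, old: float) -> float:
    """Reduction of `new` relative to `old`, in percent."""
    return (old - new) / old * 100.0


def annual_savings_usd(n_servers: int, cost_new: float, cost_old: float) -> float:
    """Fleet-wide yearly savings from a per-server cost difference."""
    return n_servers * (cost_old - cost_new)


if __name__ == "__main__":
    print(f"CO2 reduction: {percent_reduction(CO2_LIQUID_T, CO2_AIR_T):.1f}%")
    savings = annual_savings_usd(SERVERS, COST_LIQUID_USD, COST_AIR_USD)
    print(f"Energy-cost savings: ${savings:,.0f} per year")
```

With the air-cooled figure taken as exactly 8,700 t, the reduction works out to about 86%; the quoted 87% is consistent with that figure being "over" 8,700 t. The savings come to about $2,087,100, matching the "about $2.1 million" in the text.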

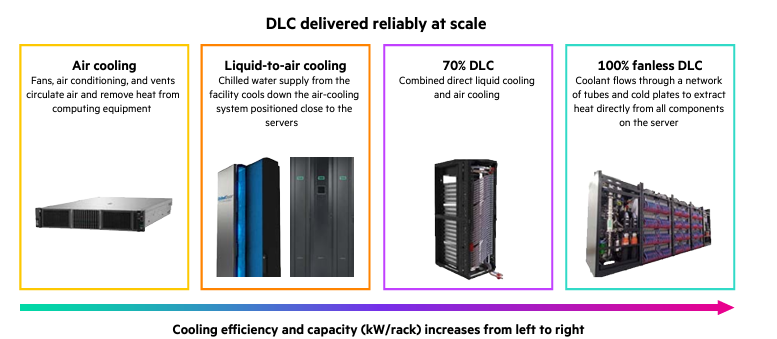

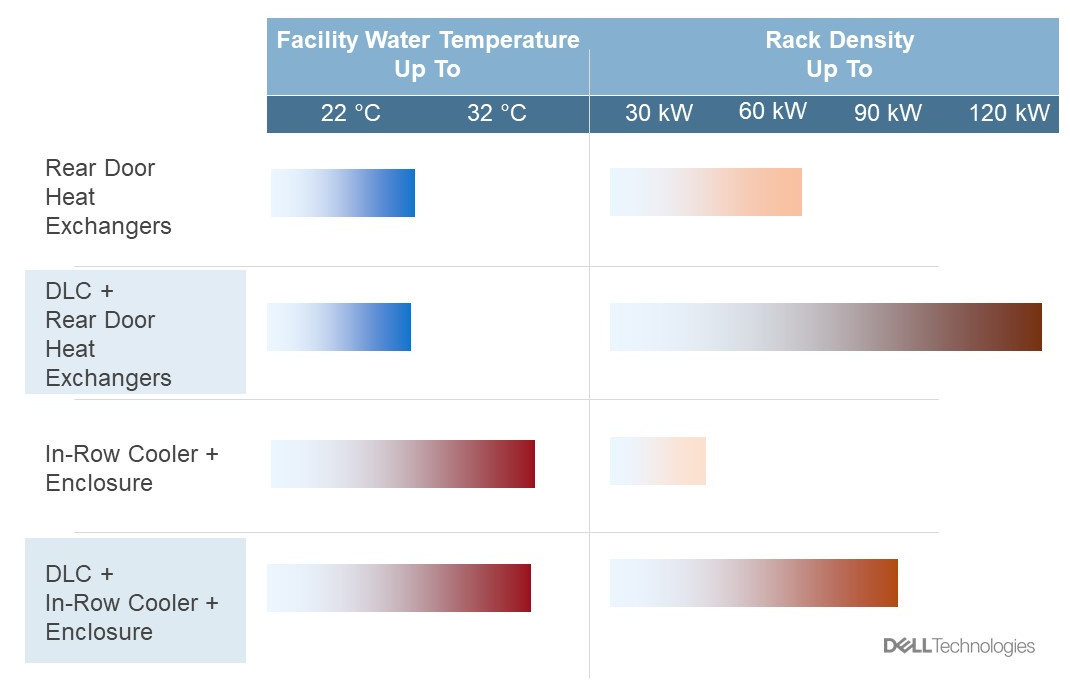

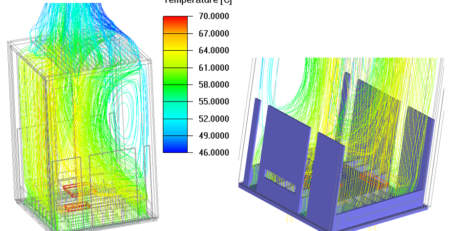

DELL

Dell’s thermal engineering team has pioneered Dell Smart Cooling, a customer-focused suite of innovations that improves cooling efficiency in high-performance server systems. Since introducing Triton, a liquid-cooled server product, in 2016, Dell has continually advanced its cooling solutions and now offers systems such as the Dell DLC3000 rack (used by Verne Global) and modular data centers supporting up to 115 kW per rack. Through the Smart Cooling initiative, Dell uses multi-vector cooling to optimize thermals and fan management across its entire product line. A key advantage of Dell’s approach is simulation-driven design, which lets thermal engineers identify airflow improvements and increase thermal design power (TDP) without time-intensive physical prototyping. The result is greater accuracy and efficiency throughout product development, reducing time to market while optimizing performance.

Figure 10. [Dell Suggested Cooling Solution]

Figure 11. [Dell Cooling Services]

The PowerEdge XE9640, Dell’s latest 2U dual-socket server, exemplifies this commitment, using direct liquid cooling (DLC) to cool both CPUs and GPUs. The model supports a range of connectivity options, including four Intel Data Center GPU Max GPUs interconnected with Xe Link or four NVIDIA H100 SXM GPUs with NVLink, as well as standard PCIe cards. By using DLC, the XE9640 achieves twice the rack density of the air-cooled XE8640, minimizing the need for chilled air and offering significant data center space savings. Dell offers it as a 42U or 48U rack solution that includes cooling distribution units, rack manifolds, and installation services; this package has already enabled institutions such as Cambridge University to deploy the UK’s fastest AI supercomputer cluster.

Figure 12. [XE9640 The Internal Liquid Cooled Module]

LENOVO

Lenovo’s latest innovations in liquid cooling, showcased in the ThinkSystem N1380 Neptune chassis, redefine data center capabilities by achieving 100% heat removal. This cutting-edge design allows 100 kW+ server racks to operate without the need for specialized air conditioning, significantly enhancing efficiency for high-performance environments. The ThinkSystem SC777 V4 Neptune server also drives this transformation by introducing the NVIDIA Blackwell platform to enterprises, bolstering capabilities in AI training, complex data processing, and simulation tasks.

Figure 13. [Lenovo Neptune N1380]

Lenovo has brought supercomputing within reach for organizations of all sizes, offering scalable solutions that support advanced generative AI while reducing data center power demands by up to 40%. The ThinkSystem N1380 Neptune, part of Lenovo’s 6th-generation liquid cooling technology, optimizes accelerated computing operations across its partner ecosystem, pushing performance limits for next-generation AI models in compact, efficient data centers.

Key Features of Lenovo’s ThinkSystem N1380 Neptune

Maximized Standard Form Factor: The N1380 provides additional space while staying within data center standard dimensions, making it scalable and accessible for enterprises at any scale, from small businesses to global organizations.

Significantly Lower Power Consumption: By eliminating the need for internal airflow and fans, this design reduces power consumption by up to 40% compared to similar air-cooled systems, and rack insulation minimizes radiant heat emissions.

Advanced Power Conversion: Each N1380 houses up to four 15kW ThinkSystem Titanium Power Conversion Stations (PCS), which deliver power to a 48V busbar. This integrated approach to power conversion, rectification, and distribution replaces the need for multiple, separate units, resulting in unmatched efficiency.

Innovative Cooling Mechanism: The N1380 features a proprietary manifold with Lenovo’s patented blind-mate connectors and aerospace-grade dripless technology, ensuring reliable waterflow distribution for safe and effective cooling. This system enables seamless connections to compute trays, maintaining continuous operation.

100% Heat Removal: Lenovo’s Neptune water-cooling system allows complete heat extraction, leveraging the design’s enhanced safety features to manage higher temperatures while maximizing energy efficiency.

Energy Repurposing: Neptune operates efficiently at water inlet temperatures up to 45°C, which eliminates additional chilling requirements. The residual heat can also be repurposed for building heating or adsorption chilling for cold water generation, aligning with green energy goals.
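The Advanced Power Conversion feature above pairs four 15 kW Power Conversion Stations with a 48V busbar. A back-of-envelope sketch of what that implies, assuming all four stations are fully loaded and ignoring conversion losses; the 12 V comparison is purely illustrative and not a Lenovo figure.

```python
# Busbar current for the N1380 power shelf, assuming four fully loaded
# 15 kW stations and no conversion losses. The 12 V case is illustrative only.

def busbar_current_a(power_w: float, voltage_v: float) -> float:
    """DC current drawn from a busbar: I = P / V."""
    return power_w / voltage_v


def relative_conduction_loss(v_low: float, v_high: float) -> float:
    """Factor by which I^2*R loss at v_low exceeds loss at v_high (same power, same conductor)."""
    return (v_high / v_low) ** 2


if __name__ == "__main__":
    total_w = 4 * 15_000
    print(f"Total PCS capacity: {total_w / 1000:.0f} kW")
    print(f"Current on a 48 V busbar: {busbar_current_a(total_w, 48):.0f} A")
    print(f"Current on a 12 V busbar: {busbar_current_a(total_w, 12):.0f} A")
    print(f"12 V conduction loss vs 48 V: {relative_conduction_loss(12, 48):.0f}x")
```

At 60 kW the 48V busbar carries about 1,250 A; delivering the same power at 12 V would need four times the current and, for the same conductor, sixteen times the resistive loss.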

With the ThinkSystem N1380 Neptune and the SC777 V4 Neptune, Lenovo is driving a new era in data center design, where the highest-performing AI, machine learning, and computational capabilities are housed in power-optimized, space-efficient, and environmentally responsible systems.

Figure 14. [ThinkSystem SC777 V4 with the NVIDIA GB200 Platform.]

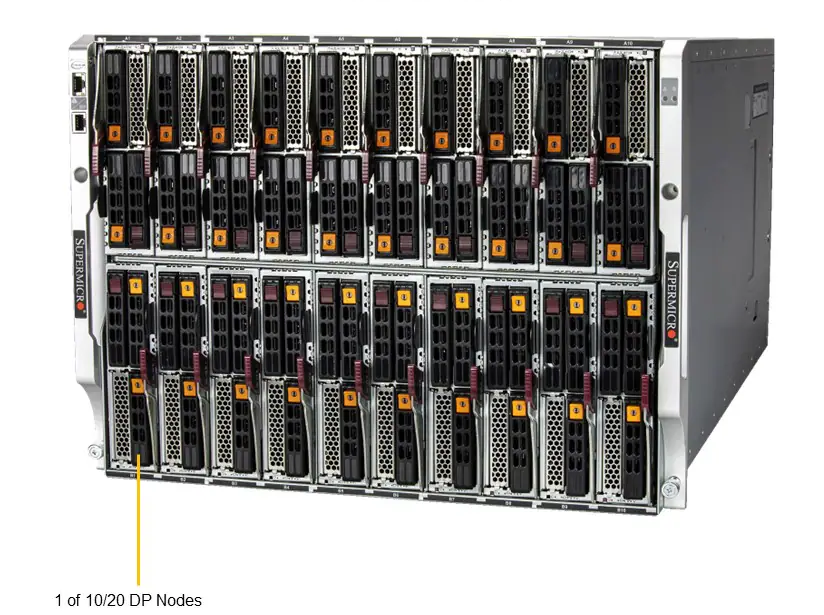

SUPERMICRO

Supermicro offers a comprehensive, rack-scale liquid-cooling solution spanning hardware and software, providing a fully integrated and tested package including servers, racks, networking, and liquid-cooling infrastructure. This end-to-end approach accelerates deployment timelines and enhances the overall quality and reliability of data center infrastructure.

GPU Systems

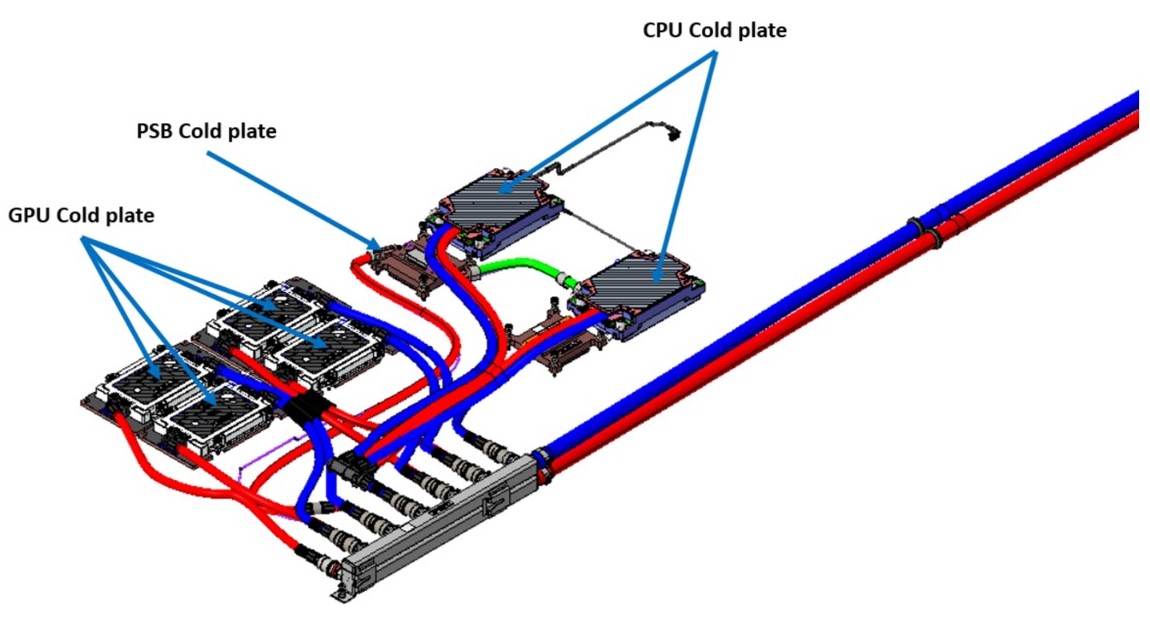

Supermicro’s GPU systems lead in AI, machine learning, and high-performance computing (HPC), combining advanced processors, DDR5 memory, and NVIDIA® GPUs. Available in 2U, 4U, or 8U configurations, these systems support up to eight NVIDIA® H100 GPUs, equipped with NVLink® and NVSwitch for optimized GPU communication. Powered by the latest Intel Xeon or AMD EPYC™ processors, the systems offer up to 32 DIMMs of DDR5, creating a powerful, compact AI and HPC platform. Direct-to-Chip (D2C) coolers are integrated across processors and GPUs and linked through Coolant Distribution Modules (CDMs) to the Coolant Distribution Unit (CDU) for efficient cooling.

Figure 15. [GPU SuperServer SYS-421GE-TNHR2-LCC]

BigTwin®

Designed for high-performance applications, the BigTwin system is a 2U multi-node platform supporting four nodes, each equipped with dual 4th Gen Intel® Xeon® Scalable processors, up to 16 DIMMs of DDR5 memory, and support for high-speed NVMe drives. Networking options, ranging from 10GbE to 200 Gb/s HDR InfiniBand, enhance the system’s versatility. Shared power and cooling reduce resource requirements, while the D2C cooling on processors integrates seamlessly with the CDU for optimized heat management.

Figure 16. [BigTwin SuperServer SYS-222BT-HNC8R]

SuperBlade

For high-density, energy-efficient computing, SuperBlade supports up to 20 blade servers within an 8U chassis. With support for the latest Intel and AMD processors and advanced networking options like 200G HDR InfiniBand, SuperBlade is engineered for power efficiency, node density, and TCO optimization, making it ideal for today’s power-conscious data centers. Each processor utilizes D2C cooling, routed through the CDM loop to the CDU for stable, effective cooling.

Figure 17. [Blade SBI-421E-1T3N]

Hyper

The Hyper product line delivers the next level of performance in 1U or 2U rackmount servers, optimized for storage and I/O versatility. With up to 32 DIMM slots, Hyper systems are designed to support the most demanding workloads. This family also integrates high-capacity cooling to accommodate top-tier CPUs, ensuring peak compute performance across diverse application requirements.

Figure 18. [Hyper SuperServer SYS-221H-TNR]

Supermicro also offers energy-efficient, versatile liquid cooling towers that can be integrated into its liquid cooling solutions. By providing fully tested, reliable, and scalable solutions, Supermicro’s liquid-cooling systems address the modern data center’s growing demands, offering flexibility and efficiency to support today’s advanced computing needs.

Challenges and Considerations for Liquid Cooling

- Initial Cost: Liquid cooling systems can have higher initial costs compared to air cooling systems.

- Complexity: Liquid cooling systems are generally more complex than air cooling systems, requiring specialized knowledge and expertise.

- Maintenance: Regular maintenance and monitoring are essential for ensuring the long-term reliability and efficiency of liquid cooling systems.

- Coolant Selection: The choice of coolant can significantly impact the system’s performance and environmental impact. Factors to consider include heat capacity, toxicity, and flammability.
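Heat capacity is the coolant property that most directly separates liquid from air. The sketch compares volumetric heat capacity (density × specific heat) for water and air and the flow each needs to remove 1 kW at a 10 K rise. The property values are approximate, near 25 °C and 1 atm, and are assumptions for illustration rather than design data.

```python
# Volumetric heat capacity (rho * cp): heat carried by 1 m^3 of fluid per
# kelvin of temperature rise. Approximate properties near 25 °C, 1 atm.

FLUIDS = {
    # name: (density kg/m^3, specific heat J/(kg*K))
    "water": (997.0, 4180.0),
    "air": (1.18, 1005.0),
}
M3_S_TO_CFM = 2118.88  # 1 m^3/s in cubic feet per minute


def volumetric_heat_capacity(density: float, cp: float) -> float:
    """J/(m^3*K)."""
    return density * cp


def volume_flow_m3_s(heat_w: float, delta_t_k: float, density: float, cp: float) -> float:
    """Flow needed to remove heat_w with a temperature rise of delta_t_k."""
    return heat_w / (volumetric_heat_capacity(density, cp) * delta_t_k)


if __name__ == "__main__":
    water = volumetric_heat_capacity(*FLUIDS["water"])
    air = volumetric_heat_capacity(*FLUIDS["air"])
    print(f"water/air volumetric heat capacity ratio: ~{water / air:,.0f}")
    water_lpm = volume_flow_m3_s(1000, 10, *FLUIDS["water"]) * 60_000
    air_cfm = volume_flow_m3_s(1000, 10, *FLUIDS["air"]) * M3_S_TO_CFM
    print(f"1 kW at dT = 10 K, water: {water_lpm:.2f} L/min")
    print(f"1 kW at dT = 10 K, air:   {air_cfm:.0f} CFM")
```

Per unit volume, water carries roughly 3,500 times more heat per kelvin than air, so about 1.4 L/min of water does the work of roughly 180 CFM of air. Coolants such as glycol mixtures or dielectric fluids trade some of this heat capacity for other properties like freeze protection or electrical safety.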

Advancements in Liquid Cooling

- Immersion Cooling: Immersion cooling involves submerging the entire server or rack in a liquid coolant. This method can provide extremely efficient cooling for high-density environments.

- Two-Phase Liquid Cooling: Two-phase liquid cooling utilizes a coolant that undergoes a phase change (from liquid to gas) to absorb heat. This can provide even more efficient cooling than single-phase systems.

- Hybrid Cooling: Hybrid cooling systems combine liquid and air cooling to optimize efficiency and flexibility.

- Coolant Efficiency: Research is ongoing to develop more efficient and environmentally friendly coolants for liquid cooling systems.

Future Trends

As the demand for data centers continues to grow, so does the need for efficient and sustainable cooling solutions. Liquid cooling is expected to play a significant role in meeting this demand, driven by the following trends:

- Two-Phase Cooling: As of 2024, single-phase direct-to-chip (D2C) cooling dominates the high-end GPU thermal management market. However, as thermal design power (TDP) keeps increasing, two-phase D2C cooling will likely be required, although large-volume adoption is not expected before 2026 to 2027.

- Growing demand for data centers: Demand in the U.S. data center market, measured by power consumption as a proxy for the number of servers, is forecast to reach 35 gigawatts (GW) by 2030. This growth is driven by the increasing digital footprint, AI, big data, and high-performance computing.

- Increasing power density: As data centers grow, so does the power density, leading to a need for more efficient cooling solutions. Liquid cooling is well-positioned to meet this need, offering a more efficient and sustainable solution for data center cooling.

- Sustainability and regulations: With the growing pressure to make data centers sustainable, regulators and governments are beginning to impose sustainability standards on newly built data centers.

- Heat recovery and reuse: Data center builders are looking for cooling approaches that can not only handle increased cooling loads but also meet new regulations on data center heat recovery and reuse.

- Increased adoption of liquid cooling: As data centers continue to grow, liquid cooling is expected to become a more popular choice for data center cooling, driven by its efficiency, scalability, and sustainability.

- Advancements in liquid cooling technology: The development of new liquid cooling technologies, such as immersion cooling and direct-to-chip cooling, is expected to continue, offering even more efficient and effective cooling solutions for data centers.

- Growing importance of data center efficiency: As data centers continue to grow, efficiency will become even more critical, a need that liquid cooling is well positioned to address.

Emerging technologies and research

ExxonMobil and Intel are working to design, test, research and develop new liquid cooling technologies to optimize data center performance and help customers meet their sustainability goals. Liquid cooling solutions serve as an alternative to traditional air-cooling methods in data centers.

Pros and Cons of Liquid Cooling

Pros:

- Higher Efficiency: Liquid cooling is more effective at transferring heat away from components.

- Energy Savings: Reduces the overall energy consumption of the data center.

- Increased Density: Allows for higher server density in a smaller footprint.

- Noise Reduction: Liquid cooling systems are generally quieter than air-cooled systems.

Cons:

- Higher Initial Cost: The upfront cost of liquid cooling systems can be higher than air-cooled systems.

- Complex Installation: Requires specialized knowledge and infrastructure.

- Maintenance: Regular maintenance is necessary to prevent leaks and ensure system integrity.

- Compatibility: Not all components are compatible with liquid cooling, which can limit flexibility.

Case study

Server and Thermal Management Information

| 1U SERVER | |
|---|---|
| MODEL | ARS-111GL-NHR |
| GPU | NVIDIA H100 Tensor Core GPU |
| GPU TDP (W) | 700 |
| NODE | 1 |
| MOTHERBOARD | MBD-G15MH-G |
| CPU | GH200 Grace Hopper™ Superchip |
| CPU TDP (W) | 300 |
| MAX (CPU+GPU) TDP (W) | 2000 |
| OPERATING ENVIRONMENT | 10-35 °C |
| ACCEPTABLE JUNCTION TEMP (°C) | <95 |

Table 4. 1U Server

| AIR COOLING | |
|---|---|
| FAN SIZE (mm) | 40 × 40 × 56 |
| NOISE LEVEL (dBA) | 70 |
| AIRFLOW (CFM) | 32.9 |
| MAX STATIC PRESSURE (inH₂O) | 6.8 |

Table 5. Air Cooling

| HEAT SINK DETAILS | |
|---|---|
| LENGTH (mm) | 90 |
| WIDTH (mm) | 150 |
| HEIGHT (mm) | 35 |
| FIN THICKNESS (mm) | 0.3 |
| BASE THICKNESS (mm) | 1.5 |
| NO. OF FINS | 93 |
| MATERIAL | Copper |

Table 6. Heat Sink Details
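Fin spacing and wetted area follow directly from the Table 6 dimensions. The sketch assumes straight plate fins running along the 90 mm length, evenly spaced across the 150 mm width and standing on the 1.5 mm base; the table does not state fin orientation, so this layout is an assumption, and fin tips and end faces are ignored.

```python
from dataclasses import dataclass

# Assumed layout (not stated in Table 6): straight plate fins along the
# 90 mm length, evenly spaced across the 150 mm width, on a 1.5 mm base.


@dataclass
class PlateFinSink:
    length_mm: float
    width_mm: float
    height_mm: float
    fin_thickness_mm: float
    base_thickness_mm: float
    n_fins: int

    @property
    def fin_height_mm(self) -> float:
        return self.height_mm - self.base_thickness_mm

    @property
    def gap_mm(self) -> float:
        """Air channel width between adjacent fins."""
        return (self.width_mm - self.n_fins * self.fin_thickness_mm) / (self.n_fins - 1)

    @property
    def fin_area_m2(self) -> float:
        """Both faces of every fin, in m^2 (tips and ends ignored)."""
        return self.n_fins * 2 * self.length_mm * self.fin_height_mm / 1e6


sink = PlateFinSink(90, 150, 35, 0.3, 1.5, 93)

if __name__ == "__main__":
    print(f"fin height: {sink.fin_height_mm:.1f} mm")
    print(f"fin gap:    {sink.gap_mm:.2f} mm")
    print(f"fin area:   {sink.fin_area_m2:.3f} m^2")
```

Under these assumptions the fins are separated by gaps of only about 1.3 mm and expose roughly 0.56 m² of surface. Such narrow channels resist airflow strongly, which is consistent with the high-static-pressure fans listed in Table 5.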

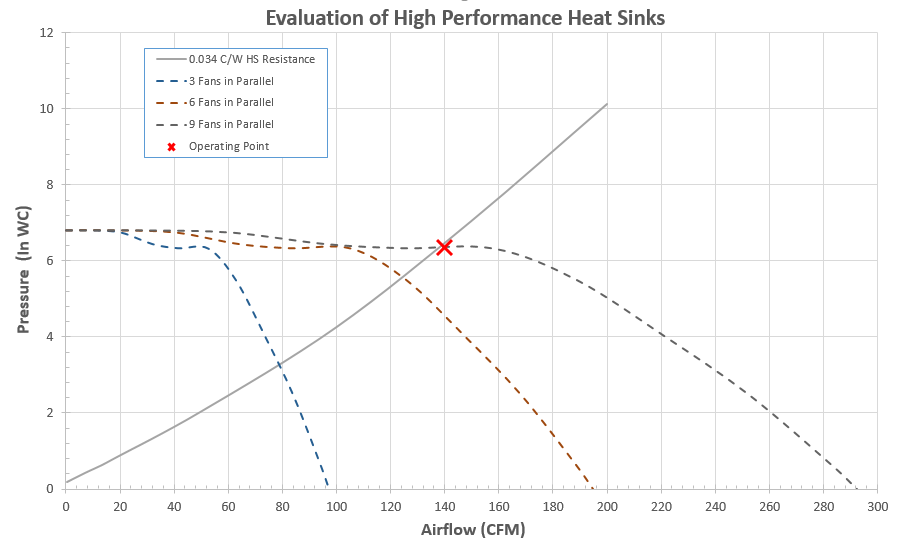

Figure 22. Evaluation of Heat Sinks

In a recent thermal analysis case study aimed at identifying the optimal air-cooling solution for the Grace Hopper Superchip, a high-performance 93-fin heat sink (Table 6) was evaluated for its effectiveness in dissipating a substantial 1000W heat load in a single-node setup. Without power mapping, this heat sink, designed for GPU and CPU cooling, showed a theoretical thermal resistance of 0.034°C/W, indicating its potential for adequate cooling in this configuration.
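A series thermal-resistance budget shows how much margin that 0.034°C/W leaves. This sketch assumes the figure is the heat sink's sink-to-air resistance, that inlet air is at the 35 °C upper limit from Table 4, and that all 1000 W flows through one path; spreading resistance, air preheat, and non-uniform power are ignored.

```python
# Series thermal-resistance budget for the 1000 W single-node case.
# Assumptions: 0.034 °C/W is sink-to-air resistance; inlet air at 35 °C
# (Table 4 upper limit); uniform power; no spreading or air preheat.

def temperature_rise_c(power_w: float, resistance_c_per_w: float) -> float:
    """dT = P * R for a thermal resistance in °C/W."""
    return power_w * resistance_c_per_w


def remaining_budget_c_per_w(t_junction_max: float, t_inlet: float,
                             power_w: float, r_sink: float) -> float:
    """Resistance left for junction-to-case and TIM after the heat sink's share."""
    r_total_allowed = (t_junction_max - t_inlet) / power_w
    return r_total_allowed - r_sink


if __name__ == "__main__":
    P, R_SINK, T_IN, TJ_MAX = 1000.0, 0.034, 35.0, 95.0
    print(f"heat sink base ≈ {T_IN + temperature_rise_c(P, R_SINK):.0f} °C")
    print(f"allowed total R: {(TJ_MAX - T_IN) / P:.3f} °C/W")
    print(f"left for junction-to-sink: {remaining_budget_c_per_w(TJ_MAX, T_IN, P, R_SINK):.3f} °C/W")
```

Under these assumptions the heat sink consumes 34 °C of the 60 °C budget, leaving about 0.026 °C/W for the die, package, and thermal interface, a tight margin that matches the projected 93 °C junction temperature sitting just below the 95 °C limit.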

Air-Cooling System with High-Performance Fans

To meet the thermal performance requirements, a system of nine high-performance fans is incorporated, delivering a total airflow of approximately 140 CFM at a power consumption of 272W. With this configuration, the junction temperature across the die is projected to reach 93°C, just below the 95°C acceptable junction temperature listed in Table 4.
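An air-side energy balance puts these fan numbers in context. The sketch computes the bulk air temperature rise for 1000 W at about 140 CFM and the fan power as a share of the heat load. Air density (≈1.18 kg/m³) and specific heat (≈1005 J/(kg·K)) are approximate assumptions.

```python
# Air-side energy balance for the fan configuration described above.
# Air properties are approximate assumptions near 25 °C.

AIR_DENSITY = 1.18   # kg/m^3
AIR_CP = 1005.0      # J/(kg*K)
CFM_TO_M3_S = 1 / 2118.88


def air_temperature_rise_k(heat_w: float, airflow_cfm: float) -> float:
    """Bulk inlet-to-outlet air temperature rise: dT = Q / (rho * cp * V_dot)."""
    v_dot = airflow_cfm * CFM_TO_M3_S
    return heat_w / (AIR_DENSITY * AIR_CP * v_dot)


def fan_overhead(fan_power_w: float, heat_w: float) -> float:
    """Fan power as a fraction of the heat it removes."""
    return fan_power_w / heat_w


if __name__ == "__main__":
    print(f"air dT: {air_temperature_rise_k(1000, 140):.1f} K")
    print(f"fan overhead: {fan_overhead(272, 1000):.0%} of the heat load")
```

The air warms by roughly 13 K, and the fans draw about 27% as much power as the heat they remove. At the same airflow, a 2000 W load would double the air temperature rise to roughly 25 K, one way to see why the two-node case below strains this design.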

The sound level of 82 dBA generated by this fan setup is notable, as it could pose challenges in data center environments where noise control is a priority. This aspect underscores the potential limitations of such high-performance air-cooling configurations in noise-sensitive applications.
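Fan noise adds logarithmically rather than linearly. The sketch applies the standard formula for uncorrelated sources, L_total = 10 · log10(Σ 10^(Lᵢ/10)), to the 70 dBA per-fan rating from Table 5. It is an idealized check: it ignores operating point, enclosure effects, and measurement position, so it is not expected to match the reported 82 dBA exactly.

```python
import math

# Idealized sound-level addition for identical, uncorrelated fans using the
# 70 dBA per-fan rating from Table 5. Enclosure and operating-point effects
# are ignored.

def combined_level_db(levels_db: list[float]) -> float:
    """Total sound pressure level of several incoherent sources."""
    return 10 * math.log10(sum(10 ** (level / 10) for level in levels_db))


if __name__ == "__main__":
    for n_fans in (1, 9, 18):
        print(f"{n_fans:2d} fans at 70 dBA -> {combined_level_db([70.0] * n_fans):.1f} dBA")
```

Nine fans give about 79.5 dBA in this model, the same range as the 82 dBA reported. Each doubling of fan count adds about 3 dB, so a two-node system with twice the fans would be louder still.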

Scalability Challenges in Two-Node Server Environments

For a dual-node server configuration handling a combined heat load of 2000W, this air-cooling setup with high-performance fans proves insufficient. Not only would the cooling requirements exceed the capabilities of the current setup, but the noise levels would also escalate beyond acceptable limits. This limitation highlights the reduced efficiency and practicality of scaling air-cooling solutions to higher power densities, suggesting a need to explore alternative thermal management approaches for multi-node configurations.

This case study provides valuable insights into the operational boundaries of air-cooling systems for high-power applications, especially in data center environments where both thermal and acoustic performance are critical.

Advantages of Liquid Cooling and the Role of Power Mapping

Given the limitations of air-cooling in this high-heat scenario, liquid cooling provides a much more efficient and consistent solution. By removing heat directly at its source, liquid cooling can operate effectively for power-dense configurations, such as those exceeding 1000W per node. This allows for:

Better Temperature Control: Liquid cooling can maintain component temperatures below critical limits (e.g., below 95°C) without the noise and airflow requirements of multiple fans.

Significant Energy Savings: Liquid cooling can offer an energy saving of 50-55% due to its efficiency in transferring and dissipating heat.

With power mapping incorporated into this analysis, a detailed breakdown of heat sources could inform liquid cooling designs tailored to the actual heat distribution, maximizing cooling where it is needed most.

Power mapping is a critical aspect of thermal analysis that can help visualize and quantify how heat is generated and distributed within a system. The power map (surface power density distribution) has a tight relationship with the temperature distribution. This process involves highlighting areas that produce the most heat. By pinpointing these high-power areas, power mapping enables a more accurate simulation of thermal performance, allowing for the assessment of specific cooling requirements and a better understanding of how heat accumulates across different regions within a system.

Incorporating power mapping into thermal analysis offers several benefits. It identifies hot spots, which can help optimize cooling solutions for critical areas. This tailored approach not only improves cooling efficiency but also helps reduce overall power consumption. Additionally, by ensuring that components operate within safe temperature limits, power mapping can extend the lifespan of parts, preventing overheating and minimizing wear. Although power mapping was not initially considered in this evaluation, its integration is essential for comprehensive thermal analysis and effective cooling design.
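A power map can be represented as a grid of per-cell power values. This minimal sketch converts a hypothetical 4 × 4 die map (5 mm × 5 mm cells, all values invented for illustration) to surface power density and flags cells above 1.5 times the die average; the grid, cell size, and 1.5 threshold are assumptions, not data from this case study.

```python
# Minimal power-map sketch. All numbers are hypothetical.

CELL_AREA_CM2 = 0.25  # 5 mm x 5 mm cells

# Hypothetical 4 x 4 power map in watts (hotter compute tiles in the center).
POWER_MAP_W = [
    [20, 30, 30, 20],
    [30, 90, 85, 30],
    [30, 95, 90, 30],
    [20, 30, 30, 20],
]


def power_density(power_map, cell_area_cm2):
    """Convert per-cell watts to W/cm^2."""
    return [[p / cell_area_cm2 for p in row] for row in power_map]


def hot_spots(power_map, cell_area_cm2, factor=1.5):
    """Return (row, col, W/cm^2) for cells above factor x the average density."""
    density = power_density(power_map, cell_area_cm2)
    cells = [d for row in density for d in row]
    average = sum(cells) / len(cells)
    return [
        (r, c, d)
        for r, row in enumerate(density)
        for c, d in enumerate(row)
        if d > factor * average
    ]


if __name__ == "__main__":
    print(f"total power: {sum(map(sum, POWER_MAP_W))} W")
    for r, c, d in hot_spots(POWER_MAP_W, CELL_AREA_CM2):
        print(f"hot spot at ({r}, {c}): {d:.0f} W/cm^2")
```

In this example the die averages 170 W/cm², but four central cells reach 340 to 380 W/cm². A uniform-power simulation would miss this concentration, which is the kind of detail a cold-plate design could target with denser channels or jets over those cells.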

Thermal Management Companies:

Several companies are at the forefront of providing innovative liquid cooling solutions for data centers and high-performance computing systems. Here are some of the key players:

CoolIT Systems

CoolIT Systems is a leading provider of direct liquid cooling solutions for data centers and high-performance computing. They offer a range of products, including:

Direct-to-Chip Liquid Cooling: CoolIT’s Direct Liquid Cooling (DLC) technology provides precise temperature control for high-performance computing components like GPUs and CPUs.

Cold Plates and Heat Sinks: They offer a variety of cold plate designs to meet specific cooling requirements, including immersion cooling and single-phase liquid cooling.

Coolant Distribution Units (CDUs): CoolIT’s CDUs manage the flow of coolant throughout the system, ensuring efficient heat dissipation.

Motivair

Motivair specializes in advanced thermal solutions for data centers and high-performance computing. Their product offerings include:

Liquid-to-Air Heat Exchangers: These heat exchangers efficiently transfer heat from the liquid coolant to the ambient air.

Cold Plates and Heat Sinks: Motivair provides a wide range of cold plate and heat sink solutions for various applications.

Pump and Valve Systems: They offer high-performance pumps and valves for circulating the coolant.

Vertiv

Vertiv offers a comprehensive suite of liquid cooling solutions and services tailored to meet the demands of high-density computing environments, such as those required for artificial intelligence (AI) and high-performance computing (HPC) applications. Their portfolio includes:

Coolant Distribution Units (CDUs): Vertiv™ CoolChip CDU 2300kW: a liquid-to-liquid system providing up to 2.3 MW of cooling capacity. Its compact design allows placement within the data center row or in a mechanical gallery, catering to hyperscalers and colocation providers deploying large-scale liquid cooling solutions.

Liebert® XDU Coolant Distribution Units: Designed to support liquid cooling in high-density environments, these units are suitable for direct-to-chip and rear-door cooling applications. They facilitate easy, cost-effective deployment without the need for facility water, enabling efficient support for higher rack densities.

Immersion Cooling Solutions (Vertiv™ Liebert® VIC Immersion Cooling Tank): This solution immerses IT equipment in a dielectric fluid, directly addressing heat at its source. It enhances performance and efficiency while minimizing operational costs, making it ideal for high-density data center applications.

Hybrid Cooling Systems (combined liquid and air cooling): In collaboration with Compass Datacenters, Vertiv has developed integrated systems that combine liquid and air-cooling technologies. These systems accommodate mixed cooling needs, facilitating the deployment of AI applications and supporting rapidly evolving data center environments.

LiquidStack

LiquidStack is a provider of advanced liquid cooling solutions designed to meet the most challenging thermal management needs in data centers and High-Performance Computing (HPC) environments.

Solutions Offered:

Direct-to-Chip Coolant Distribution Units (CDUs): These systems provide up to 1,350kW of cooling capacity at N+1 redundancy. They target CPUs and GPUs, transferring 80% of their heat to water, achieving a partial Power Usage Effectiveness (pPUE) of 1.16. This approach reduces fan power and water consumption, significantly decreasing data center whitespace and improving both PUE and Water Usage Effectiveness (WUE).

Single-Phase Immersion Cooling: Offering up to 110kW of cooling capacity in less than four rack spaces, this solution eliminates the need for fans, resulting in a pPUE of 1.06. It reduces energy consumption and noise while improving PUE and WUE. The cooling fluids used can capture all IT system heat and provide elevated water temperatures.

Two-Phase Immersion Cooling: This technology delivers up to 252kW of cooling capacity in a single tank. By utilizing fluid vaporization, it extracts more heat than any other liquid cooling technology, achieving a pPUE as low as 1.03. It significantly reduces data center footprint, eliminates water consumption, pumps, and fans, leading to downsized heat rejection systems and lower PUE, WUE, and Scope 2 emissions.

Prefabricated Modular Solutions: LiquidStack’s MicroModular™ and MegaModular™ systems offer efficient liquid cooling in compact modular containers, providing scalable and sustainable cooling solutions for edge and micro data centers. By integrating these solutions, LiquidStack enables data centers to cool the most demanding workloads, maximize energy efficiency, reduce environmental impact, and capitalize on energy reuse opportunities.
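The partial PUE figures quoted above translate directly into cooling power. This sketch assumes pPUE = (IT power + cooling power inside the boundary) / IT power and uses a hypothetical 1,000 kW IT load as a common comparison point. The figures are vendor-reported and may use different measurement boundaries (the direct-to-chip figure, for instance, covers the roughly 80% of heat captured by water).

```python
# What the quoted pPUE values imply, assuming
# pPUE = (IT power + cooling power within the boundary) / IT power.
# The 1,000 kW IT load is a hypothetical comparison point.

PPUE = {
    "direct-to-chip CDU": 1.16,
    "single-phase immersion": 1.06,
    "two-phase immersion": 1.03,
}


def cooling_overhead_kw(it_load_kw: float, ppue: float) -> float:
    """Power spent on cooling inside the pPUE boundary."""
    if ppue < 1.0:
        raise ValueError("pPUE cannot be below 1.0")
    return it_load_kw * (ppue - 1.0)


if __name__ == "__main__":
    it_kw = 1_000.0
    for name, value in PPUE.items():
        print(f"{name:24s} pPUE {value:.2f} -> "
              f"{cooling_overhead_kw(it_kw, value):.0f} kW of cooling power")
```

For every 1,000 kW of IT load, the three figures correspond to about 160 kW, 60 kW, and 30 kW of cooling power, respectively.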

JETCOOL

JetCool offers a range of innovative products designed to efficiently cool high-power electronics. Their offerings include:

Microconvective Liquid Cooling Technology: JetCool’s patented microconvective cooling® technology utilizes arrays of small fluid jets that precisely target hot spots on processors, significantly enhancing cooling performance at the chip level. This approach reduces thermal resistance and eliminates the need for thermal pastes and interface materials, enabling superior heat transfer and improved device efficiency.

SmartPlate System: A self-contained, plug-and-play liquid cooling solution for servers, the SmartPlate System integrates into various server form factors to meet diverse AI and high-performance computing (HPC) requirements. It supports rack densities up to 50 kW without requiring a coolant distribution unit (CDU), achieving average server power savings of 15% and reducing server noise by 13 dBA.
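
Decibels are logarithmic, so the 13 dBA reduction quoted above corresponds to a much larger drop in acoustic power. A quick check, assuming the standard level definition L = 10·log10(P / P_ref) applied to A-weighted sound power:

```python
def level_reduction_to_power_ratio(delta_db: float) -> float:
    """Before/after acoustic power ratio for a level drop of delta_db decibels."""
    return 10 ** (delta_db / 10)

ratio = level_reduction_to_power_ratio(13.0)
print(f"13 dBA lower means about 1/{ratio:.0f} of the A-weighted acoustic power")
# prints "13 dBA lower means about 1/20 of the A-weighted acoustic power"
```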

SmartPlate Cold Plates: These fully sealed cold plates are engineered to cool the industry's highest-power AI devices, handling thermal design power (TDP) above 3,000 W. Because SmartPlates work with inlet coolant temperatures above 50°C, facilities can eliminate the need for chillers and cooling towers, maximizing compute performance and power efficiency.
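
Warm inlet water matters because a dry cooler can only deliver coolant somewhat above outdoor air temperature. The sketch below checks whether chiller-free operation is plausible, assuming the coolant reaching the cold plate is ambient dry-bulb plus a fixed 10 K approach. Both the approach and the ambient temperatures are hypothetical; a real design would use site weather data and the actual heat-exchanger approaches.

```python
def chiller_free(ambient_c: float, max_inlet_c: float, approach_k: float = 10.0) -> bool:
    """True if a dry cooler alone can supply coolant at or below max_inlet_c.

    ASSUMPTION: supply temperature = ambient dry-bulb + a fixed approach (approach_k).
    """
    return ambient_c + approach_k <= max_inlet_c

MAX_INLET_C = 50.0  # inlet temperature cited above for SmartPlate cold plates

for ambient in (25.0, 35.0, 45.0):  # hypothetical design-day dry-bulb temperatures
    print(f"{ambient:.0f} C ambient -> chiller-free: {chiller_free(ambient, MAX_INLET_C)}")
```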

SmartLid Liquid-to-Die Cooling: Designed for semiconductor manufacturers and chipmakers, SmartLid brings fluid directly to the processing chip, eliminating all thermal pastes and interface materials. This solution supports over 2,000 W in a single socket and offers a heat transfer coefficient ten times greater than traditional methods, making it well suited to devices designed to be more powerful, efficient, and compact.
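
For a fixed heat load and wetted area, the convective temperature rise is ΔT = Q / (h·A), so a tenfold higher heat transfer coefficient cuts that rise by the same factor. The die area and baseline coefficient below are hypothetical values chosen only to illustrate the ratio.

```python
def convective_delta_t(power_w: float, h_w_per_m2k: float, area_m2: float) -> float:
    """Temperature rise between the wetted surface and the coolant: dT = Q / (h * A)."""
    return power_w / (h_w_per_m2k * area_m2)

POWER_W = 2000.0       # single-socket load cited above
AREA_M2 = 8e-4         # HYPOTHETICAL 800 mm^2 wetted die area
H_BASELINE = 50_000.0  # HYPOTHETICAL effective h for a conventional stack, W/(m^2*K)

baseline = convective_delta_t(POWER_W, H_BASELINE, AREA_M2)
improved = convective_delta_t(POWER_W, 10 * H_BASELINE, AREA_M2)
print(f"baseline dT: {baseline:.1f} K, with 10x h: {improved:.1f} K")
# prints "baseline dT: 50.0 K, with 10x h: 5.0 K"
```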

Asetek

Asetek is a pioneer in liquid cooling technology, providing advanced thermal management solutions for data centers, high-performance computing (HPC), and gaming systems. Asetek’s offerings include:

Direct-to-Chip (D2C) Liquid Cooling: Asetek’s D2C cooling technology provides direct cooling to high-power components like CPUs, GPUs, and ASICs. By circulating liquid through custom cold plates, the system efficiently transfers heat away from key components, enhancing system performance and reducing reliance on air-based cooling.

Rack-Level Liquid Cooling Solutions: Asetek’s rack cooling systems support high-density server environments, offering scalable solutions that maintain consistent performance. By using liquid-to-liquid (L2L) or liquid-to-air (L2A) configurations, these systems are tailored to handle power-dense workloads in AI, ML, and HPC environments.

Coolant Distribution Units (CDUs): Asetek’s CDUs manage the distribution of coolant within data center liquid cooling systems. They ensure stable coolant flow, optimal temperatures, and pressure control, enabling seamless integration with existing server and data center infrastructure.
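
A CDU's flow requirement follows from the energy balance Q = ρ·V̇·c_p·ΔT. The sketch below solves it for flow, assuming plain water with approximate properties at room temperature; glycol mixtures have a lower specific heat and need proportionally more flow. The 100 kW rack and 10 K supply-to-return rise are hypothetical.

```python
WATER_DENSITY_KG_M3 = 997.0  # approx. water at 25 °C
WATER_CP_KJ_KGK = 4.18       # approx. specific heat of water

def required_flow_lpm(heat_kw: float, delta_t_k: float,
                      density: float = WATER_DENSITY_KG_M3,
                      cp: float = WATER_CP_KJ_KGK) -> float:
    """Coolant flow needed to carry heat_kw with a supply-to-return rise of delta_t_k.

    From Q = rho * V_dot * cp * dT solved for V_dot, then m^3/s converted to L/min.
    """
    m3_per_s = heat_kw / (density * cp * delta_t_k)  # kW / (kJ/m^3) = m^3/s
    return m3_per_s * 1000 * 60

print(f"{required_flow_lpm(100.0, 10.0):.0f} LPM")  # hypothetical 100 kW rack, 10 K rise
# prints "144 LPM"
```

Halving the allowed temperature rise doubles the required flow, which is one reason CDU datasheets quote capacity together with flow and pressure.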

Liquid-Cooled GPUs and CPUs: Asetek provides integrated cooling solutions for CPUs and GPUs used in data centers, gaming, and high-performance computing. These systems reduce thermal resistance, improve overclocking potential, and deliver quieter, more energy-efficient cooling compared to traditional air-based solutions.

Hybrid Cooling Solutions: Asetek’s hybrid cooling systems offer a combination of liquid and air cooling for maximum energy efficiency and flexibility. By blending the benefits of liquid cooling with traditional air-based methods, hybrid solutions provide a balanced approach to thermal management.

Boyd Corporation

Boyd Corporation is a global leader in thermal management solutions, providing a diverse range of products. Their offerings include:

Thermal Interface Materials (TIMs): TIMs are essential for effective heat transfer between components. Boyd offers a variety of TIMs, including thermal pads, thermal greases, and phase-change interfaces.
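
The bulk resistance of a TIM layer is R = t / (k·A), so bond-line thickness matters as much as conductivity. The sketch compares two hypothetical materials; the thicknesses, conductivities, contact area, and power are illustrative only, and the contact resistance at each interface (often significant) is ignored.

```python
def tim_resistance_k_per_w(thickness_m: float, conductivity_w_mk: float, area_m2: float) -> float:
    """Bulk conduction resistance of a TIM layer, R = t / (k * A).

    Ignores the contact resistances at both interfaces.
    """
    return thickness_m / (conductivity_w_mk * area_m2)

AREA_M2 = 40e-3 * 40e-3  # HYPOTHETICAL 40 mm x 40 mm contact area
POWER_W = 500.0          # HYPOTHETICAL component power

# HYPOTHETICAL (thickness in m, conductivity in W/(m*K)) pairs, for illustration only
for name, t, k in [("thermal pad", 0.5e-3, 6.0), ("thermal grease", 0.05e-3, 4.0)]:
    r = tim_resistance_k_per_w(t, k, AREA_M2)
    print(f"{name}: R = {r:.4f} K/W, temperature drop = {r * POWER_W:.1f} K")
```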

Cold Plates and Heat Sinks: Boyd designs and manufactures custom cold plates and heat sinks to meet specific cooling requirements.

Coolant Distribution Units (CDUs): Boyd provides standalone CDUs and temperature control units (TCUs) to meet data center thermal requirements.

CDU Suppliers:

| Supplier | Product Line | Model Name: Cooling Capacity (kW) | Details | Link |
|---|---|---|---|---|
| Delta | L2L | DHS-X430175-01 (4U): 100 | 2+1 pumps | https://www.deltaww.com/en-US/products/03040202/16683 |
| Delta | L2A | FHS-X440350-01 (8U): 14.5 | 1+1 pumps; 5-30 LPM | https://www.deltaww.com/en-US/products/03040202/16684 |
| Boyd | L2L | ROL1100-48U32: 550-1100 | 473 LPM @ 2.1 bar; dimensions: 800 mm x 1205 mm x 2147 mm | https://www.boydcorp.com/thermal/liquid-cooling-systems/coolant-distribution-unit-cdu.html |
| Boyd | L2L | RAL110-04U19: 50-110 | 90 LPM @ 0.7 bar; dimensions: 450 mm x 966 mm x 175 mm | |
| Boyd | L2A | RAA32-10U21: 16-32 | 60 LPM @ 0.8 bar; dimensions: 538 mm x 982 mm x 440 mm | |
| Boyd | L2A | RAA15-10U19: 6.5-15 | 45 LPM @ 0.7 bar; dimensions: 538 mm x 982 mm x 440 mm | |
| CoolIT Systems | L2L | CHx500 CDU: 500 | 3 pumps (N+1); two A+B redundant, hot-swappable power supplies; integrated control and monitoring system (SSH, SNMP, TCP/IP, Modbus and others); 11U height, allowing 4 CDU units + 3U coolant reservoir in a single 48U rack | https://www.coolitsystems.com/product/chx500-cdu/ |
| CoolIT Systems | L2L | CHx750 CDU: 750 | 50 micron filtration; 900 servers; power consumption: 750 kW | https://www.coolitsystems.com/product/chx750-cdu/ |
| CoolIT Systems | L2L | CHx200 CDU: 200 | 4U rack-mount chassis; 200 servers; power consumption: 2.4 kW | https://www.coolitsystems.com/product/chx200-cdu/ |
| CoolIT Systems | L2L | CHx80 CDU: 80 | 4U rack-mount chassis; 100 servers/rack; power consumption: 0.652 kW | https://www.coolitsystems.com/product/chx80v2-cdu/ |
| CoolIT Systems | L2A | AHx10 CDU: 7-10 | compact 5U unit mounts directly into the rack; power consumption: 0.75 kW | https://www.coolitsystems.com/product/ahx10-cdu/ |
| CoolIT Systems | L2A | AHx2 CDU: 2 | 50 micron filtration; 4 servers; power consumption: 0.15 kW | https://www.coolitsystems.com/product/ahx2-cdu/ |
| CoolIT Systems | L2A | AHx180 CDU: 180 | 2 pumps (2N); 270 LPM @ 40 psi; 4 fans (N+1); group control: 20 units | https://www.coolitsystems.com/product/ahx180-cdu/ |
| CoolIT Systems | L2A | AHx240 CDU: 240 | 2 pumps (2N); 270 LPM @ 40 psi; 8 fans (N+1); group control: 20 units | https://www.coolitsystems.com/product/ahx240-cdu/ |
| CoolIT Systems | L2A | AHx100 CDU: 100 | 80 to 100 servers; power consumption: 1.9 kW | https://www.coolitsystems.com/product/ahx100-cdu-1/ |
| Motivair | L2L | MCDU60: 2350 | 800 GPM @ 32 psi; 2 pumps; 37” x 17-3/4” x 7”; <72 dBA | |
| Motivair | L2L | MCDU50: 1725 | 420 GPM @ 30 psi; 2 pumps; 63” x 48-1/8” x 98-3/8”; <75 dBA | |
| Motivair | L2L | MCDU40: 1250 | 280 GPM @ 32 psi; 2 pumps; 60-1/4” x 35-1/2” x 80-1/4”; <70 dBA | |
| Motivair | L2L | MCDU30: 860 | 180 GPM @ 37 psi; 2 pumps; 48” x 30” x 80-1/4”; <68 dBA | |
| Motivair | L2L | MCDU25: 625 | 185 GPM @ 37 psi; 2 pumps; 42-1/2” x 31-1/2” x 73-5/8”; <68 dBA | |
| Motivair | L2L | MCDU4U: 105 | 30 GPM @ 15 psi; 2 pumps; 37” x 17-3/4” x 7”; <55 dBA | |
| Advanced Thermal Solutions, Inc. | L2L | iCDM: 10-20 | 22 LPM @ 100 psi; dimensions: 550 x 800 x 574.5 mm; power consumption: 2.3 kW | https://www.qats.com/Products/Liquid-Cooling/iCDM |
| ATTOM | RDHx | Smooth Air: 30 | adaptable to all rack heights; green refrigerant R134A; DX-based design | https://attom.tech/smoothair-rear-door-heat-exchanger/ |
| ATTOM | L2L | Bytecool: 250-400 | standard 19-inch rack | https://attom.tech/bytecool-d2c-liquid-cooling/ |
| ATTOM | Single-phase immersion cooling | OceanCool: 50 per tank | colorless, odorless, and nontoxic coolant; PUE: 0.03 | https://attom.tech/oceancool-immersion-liquid-cooling/ |
| nVent SCHROFF | L2L | RackChiller CDU40: 40 | 4U; 3 pumps (N+1); power: 0.97 kW; 80 LPM; max pressure: 232 psi | https://schroff.nvent.com/en-us/solutions/schroff/applications/coolant-distribution-unit |
| nVent SCHROFF | L2L | RackChiller CDU40: 125 | 4U; dimensions: 950 x 430 x 177 mm; 2 pumps (N+1); power: 2.5 kW; 170 LPM; max pressure: 232 psi | https://schroff.nvent.com/en-us/solutions/schroff/applications/coolant-distribution-unit |
| nVent SCHROFF | L2L | RackChiller CDU800: 800+ | dimensions: 1194 x 787 x 2210 mm; power: 22.2 kW; 1200 LPM; max pressure: 150 psi; 68 dBA | https://www.nvent.com/en-in/hoffman/products/rackchiller-cdu800-coolant-distribution-unit-0 |
| Coolcentric | RDHx | CD6C: 305 | supports up to 26 RDHx at a 10 kW load density; 600 x 1000 mm; 5U high; intelligent monitoring through SNMP or MODBUS | https://coolcentric.com/coolant-distribution-units/ |
| Lneya | L2A | ZLFQ-15: 15 | 10-25 LPM; storage volume: 15 L; power: 1 kW | https://www.lneya.com/products/coolant-distribution-unit-cdu-chiller.html |
| Lneya | L2A | ZLFQ-25: 25 | 25-50 LPM; storage volume: 30 L; power: 1.5 kW | |
| Lneya | L2A | ZLFQ-50: 50 | 40-110 LPM; storage volume: 60 L; power: 3 kW | |
| Lneya | L2A | ZLFQ-75: 75 | 70-150 LPM; storage volume: 100 L; power: 4 kW | |
| Lneya | L2A | ZLFQ-100: 100 | 150-250 LPM; storage volume: 150 L; power: 5 kW | |
| Lneya | L2A | ZLFQ-150: 150 | 200-400 LPM; storage volume: 200 L; power: 6 kW | |
| Lneya | L2L | ZLFQ-200: 200 | 250-500 LPM; storage volume: 250 L | https://www.lneya.com/products/coolant-distribution-unit-cdu-chiller.html |
| Lneya | L2L | ZLFQ-250: 250 | 333-583 LPM; storage volume: 300 L | |
| Lneya | L2L | ZLFQ-300: 300 | 416-667 LPM; storage volume: 600 L | |
| Lneya | L2L | ZLFQ-400: 400 | 500-1000 LPM; storage volume: 1000 L | |
| Lneya | L2L | ZLFQ-500: 500 | 667-1667 LPM; storage volume: 1200 L | |
| DCX | L2L | CDU3-CDU50: 100-5000 | 10-30 server racks | https://dcx.eu/coolant-distribution-units/ |
| Vertiv | L2L | Liebert XDU 450: 453 | 132 GPM max flow; max power consumption: 7.3 kW | |
| Vertiv | L2L | Liebert XDU 1350: 1368 | 475.5 GPM max flow; max power consumption: 20.5 kW | |
| Vertiv | L2L | CoolChip CDU: 600 | 238 GPM max flow; max power consumption: 4.5 kW | https://www.vertiv.com/en-ca/products-catalog/thermal-management/high-density-solutions/vertiv-coolchip-cdu/ |

Table 7. CDU Suppliers
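
The flow and pressure figures in Table 7 mix US units (GPM, psi) and metric units (LPM, bar), which makes side-by-side comparison awkward. A small normalization helper, assuming the Motivair and Vertiv GPM figures are US gallons per minute:

```python
LPM_PER_US_GPM = 3.785411784  # 1 US gallon = 3.785411784 L
BAR_PER_PSI = 0.0689475729    # 1 psi is about 0.0689476 bar

def gpm_to_lpm(gpm: float) -> float:
    """Convert US gallons per minute to litres per minute."""
    return gpm * LPM_PER_US_GPM

def psi_to_bar(psi: float) -> float:
    """Convert pounds per square inch to bar."""
    return psi * BAR_PER_PSI

# (GPM, psi) pairs copied from the Motivair rows of Table 7
motivair = {"MCDU4U": (30, 15), "MCDU25": (185, 37), "MCDU60": (800, 32)}
for model, (gpm, psi) in motivair.items():
    print(f"{model}: {gpm_to_lpm(gpm):.0f} LPM @ {psi_to_bar(psi):.2f} bar")
```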

| Supplier | Link |
|---|---|
| JetCool | https://jetcool.com/smartplate-cold-plate/ |
| Advanced Cooling Technologies (ACT) | https://www.1-act.com/thermal-solutions/passive/custom-cold-plates/ |
| DCX | https://dcx.eu/direct-liquid-cooling/processor-coldplates/ |
| ThermoElectric Cooling America Corporation | https://www.thermoelectric.com/cold-plates/ |
| ThermAvant Technologies | https://www.thermavant.com/cold-plates/ |
| ThermoCool | https://thermocoolcorp.com/project/cold-plates/ |
| Cofan USA | https://www.cofanthermal.com/cold-plates/ |
| Mikros Technologies (acquired by Jabil) | https://www.mikrostechnologies.com/ |
| Wieland Microcool | https://www.microcooling.com/our-products/cold-plate-products/4000-series-standard-cold-plates/ |
| CoolIT Systems | https://www.coolitsystems.com/products/ |
| Motivair | https://www.motivaircorp.com/products/dynamic-cold-plates/ |
| Yinlun TDI LLC | Yinlun TDI LLC: Thermal Management Solutions |
| TAT Technologies | Thermal Management – TAT Technologies |

Table 8. Cold Plate Suppliers

Conclusion

Liquid cooling is becoming an essential technology for modern data center infrastructures, especially those supporting AI and HPC workloads. The integration of advanced cooling components, such as CDUs and cold plates, along with comprehensive management software, is enabling data centers to achieve higher efficiency, reliability, and performance. As the demand for high-performance computing continues to grow, liquid cooling will play a crucial role in meeting the cooling needs of the next generation of data centers.

Adopting liquid cooling solutions allows data center operators to meet the increasing thermal demands of AI-driven workloads while contributing to their sustainability goals. These systems enhance energy efficiency, support denser computing environments, and reduce operational costs, making them a vital part of the industry’s future.

We’d love to hear your thoughts and experiences with liquid cooling! Share your stories in the comments below or reach out to us for more information.
